+

+[Build Status](https://github.com/features/actions)

+[Coverage](https://codecov.io/gh/swaggo/swag)

+[Go Report Card](https://goreportcard.com/report/github.com/swaggo/swag)

+[codebeat](https://codebeat.co/projects/github-com-swaggo-swag-master)

+[GoDoc](https://godoc.org/github.com/swaggo/swag)

+[Backers](#backers)

+[Sponsors](#sponsors) [FOSSA Status](https://app.fossa.io/projects/git%2Bgithub.com%2Fswaggo%2Fswag?ref=badge_shield)

+[Release](https://github.com/swaggo/swag/releases)

+

+

+Swag converts Go annotations to Swagger Documentation 2.0. We've created a variety of plugins for popular [Go web frameworks](#supported-web-frameworks). This allows you to quickly integrate with an existing Go project (using Swagger UI).

+

+## Contents

+ - [Getting started](#getting-started)

+ - [Supported Web Frameworks](#supported-web-frameworks)

+ - [How to use it with Gin](#how-to-use-it-with-gin)

+ - [The swag formatter](#the-swag-formatter)

+ - [Implementation Status](#implementation-status)

+ - [Declarative Comments Format](#declarative-comments-format)

+ - [General API Info](#general-api-info)

+ - [API Operation](#api-operation)

+ - [Security](#security)

+ - [Examples](#examples)

+ - [Descriptions over multiple lines](#descriptions-over-multiple-lines)

+ - [User defined structure with an array type](#user-defined-structure-with-an-array-type)

+ - [Function scoped struct declaration](#function-scoped-struct-declaration)

+ - [Model composition in response](#model-composition-in-response)

+ - [Add headers in response](#add-a-headers-in-response)

+ - [Use multiple path params](#use-multiple-path-params)

+ - [Example value of struct](#example-value-of-struct)

+ - [SchemaExample of body](#schemaexample-of-body)

+ - [Description of struct](#description-of-struct)

+ - [Use swaggertype tag to support a custom type](#use-swaggertype-tag-to-supported-custom-type)

+ - [Use global overrides to support a custom type](#use-global-overrides-to-support-a-custom-type)

+ - [Use swaggerignore tag to exclude a field](#use-swaggerignore-tag-to-exclude-a-field)

+ - [Add extension info to struct field](#add-extension-info-to-struct-field)

+ - [Rename model to display](#rename-model-to-display)

+ - [How to use security annotations](#how-to-use-security-annotations)

+ - [Add a description for enum items](#add-a-description-for-enum-items)

+ - [Generate only specific docs file types](#generate-only-specific-docs-file-types)

+ - [How to use Go generic types](#how-to-use-generics)

+- [About the Project](#about-the-project)

+

+## Getting started

+

+1. Add comments to your API source code. See [Declarative Comments Format](#declarative-comments-format).

+

+2. Install swag by using:

+```sh

+go install github.com/swaggo/swag/cmd/swag@latest

+```

+To build from source you need [Go](https://golang.org/dl/) (1.18 or newer).

+

+Alternatively, you can run the Docker image:

+```sh

+docker run --rm -v $(pwd):/code ghcr.io/swaggo/swag:latest

+```

+

+Or download a pre-compiled binary from the [release page](https://github.com/swaggo/swag/releases).

+

+3. Run `swag init` in the project's root folder, which contains the `main.go` file. This parses your comments and generates the required files in the `docs` folder (`docs.go`, `swagger.json` and `swagger.yaml` by default).

+```sh

+swag init

+```

+

+ Make sure to import the generated `docs` package (`docs/docs.go`) so that your specific configuration gets `init`'ed. If your General API annotations do not live in `main.go`, you can tell swag where they are with the `-g` flag.

+ ```go

+ import _ "example-module-name/docs"

+ ```

+ ```sh

+ swag init -g http/api.go

+ ```

+

+4. (Optional) Use `swag fmt` to format the swag comments. (This requires a recent version of swag; please upgrade to the latest release.)

+

+ ```sh

+ swag fmt

+ ```

+

+## swag cli

+

+```sh

+swag init -h

+NAME:

+ swag init - Create docs.go

+

+USAGE:

+ swag init [command options] [arguments...]

+

+OPTIONS:

+ --quiet, -q Make the logger quiet. (default: false)

+ --generalInfo value, -g value Go file path in which 'swagger general API Info' is written (default: "main.go")

+ --dir value, -d value Directories you want to parse,comma separated and general-info file must be in the first one (default: "./")

+ --exclude value Exclude directories and files when searching, comma separated

+ --propertyStrategy value, -p value Property Naming Strategy like snakecase,camelcase,pascalcase (default: "camelcase")

+ --output value, -o value Output directory for all the generated files(swagger.json, swagger.yaml and docs.go) (default: "./docs")

+ --outputTypes value, --ot value Output types of generated files (docs.go, swagger.json, swagger.yaml) like go,json,yaml (default: "go,json,yaml")

+ --parseVendor Parse go files in 'vendor' folder, disabled by default (default: false)

+ --parseDependency, --pd Parse go files inside dependency folder, disabled by default (default: false)

+ --markdownFiles value, --md value Parse folder containing markdown files to use as description, disabled by default

+ --codeExampleFiles value, --cef value Parse folder containing code example files to use for the x-codeSamples extension, disabled by default

+ --parseInternal Parse go files in internal packages, disabled by default (default: false)

+ --generatedTime Generate timestamp at the top of docs.go, disabled by default (default: false)

+ --parseDepth value Dependency parse depth (default: 100)

+ --requiredByDefault Set validation required for all fields by default (default: false)

+ --instanceName value This parameter can be used to name different swagger document instances. It is optional.

+ --overridesFile value File to read global type overrides from. (default: ".swaggo")

+ --parseGoList Parse dependency via 'go list' (default: true)

+ --tags value, -t value A comma-separated list of tags to filter the APIs for which the documentation is generated.Special case if the tag is prefixed with the '!' character then the APIs with that tag will be excluded

+ --templateDelims value, --td value Provide custom delimeters for Go template generation. The format is leftDelim,rightDelim. For example: "[[,]]"

+ --collectionFormat value, --cf value Set default collection format (default: "csv")

+ --state value Initial state for the state machine (default: ""), @HostState in root file, @State in other files

+ --help, -h show help (default: false)

+```
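The `--tags` option above combines inclusion and `!`-prefixed exclusion in one comma-separated list. The Go sketch below models that rule as a predicate so the combinations are easy to reason about. It is an illustration, not swag's source: the name `includeOperation` is hypothetical, and the assumed semantics (an excluded tag always wins; a filter made only of exclusions keeps every other operation) should be checked against your swag version.

```go
package main

import (
	"fmt"
	"strings"
)

// includeOperation is a hypothetical sketch of the --tags filter.
// filter is the comma-separated flag value; opTags are the operation's @Tags.
// Assumed semantics: an excluded tag always wins; otherwise the operation is
// kept if it carries any included tag, or if the filter lists only exclusions.
func includeOperation(filter string, opTags []string) bool {
	if strings.TrimSpace(filter) == "" {
		return true // no filter: document every operation
	}
	include := map[string]bool{}
	exclude := map[string]bool{}
	for _, t := range strings.Split(filter, ",") {
		t = strings.TrimSpace(t)
		switch {
		case t == "":
			continue
		case strings.HasPrefix(t, "!"):
			exclude[strings.TrimPrefix(t, "!")] = true
		default:
			include[t] = true
		}
	}
	matched := false
	for _, tag := range opTags {
		if exclude[tag] {
			return false
		}
		if include[tag] {
			matched = true
		}
	}
	// With only exclusions in the filter, every non-excluded operation stays.
	return matched || len(include) == 0
}

func main() {
	fmt.Println(includeOperation("accounts", []string{"accounts"}))
	fmt.Println(includeOperation("accounts", []string{"bottles"}))
	fmt.Println(includeOperation("!admin", []string{"accounts"}))
	fmt.Println(includeOperation("accounts,!admin", []string{"accounts", "admin"}))
}
```

Under these assumptions, `swag init -t accounts,!admin` would document operations tagged `accounts` unless they are also tagged `admin`.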

+

+```bash

+swag fmt -h

+NAME:

+ swag fmt - format swag comments

+

+USAGE:

+ swag fmt [command options] [arguments...]

+

+OPTIONS:

+ --dir value, -d value Directories you want to parse,comma separated and general-info file must be in the first one (default: "./")

+ --exclude value Exclude directories and files when searching, comma separated

+ --generalInfo value, -g value Go file path in which 'swagger general API Info' is written (default: "main.go")

+ --help, -h show help (default: false)

+

+```

+

+## Supported Web Frameworks

+

+- [gin](http://github.com/swaggo/gin-swagger)

+- [echo](http://github.com/swaggo/echo-swagger)

+- [buffalo](https://github.com/swaggo/buffalo-swagger)

+- [net/http](https://github.com/swaggo/http-swagger)

+- [gorilla/mux](https://github.com/swaggo/http-swagger)

+- [go-chi/chi](https://github.com/swaggo/http-swagger)

+- [flamingo](https://github.com/i-love-flamingo/swagger)

+- [fiber](https://github.com/gofiber/swagger)

+- [atreugo](https://github.com/Nerzal/atreugo-swagger)

+- [hertz](https://github.com/hertz-contrib/swagger)

+

+## How to use it with Gin

+

+Find the example source code [here](https://github.com/swaggo/swag/tree/master/example/celler).

+

+Finish the steps in [Getting started](#getting-started) first.

+1. After using `swag init` to generate Swagger 2.0 docs, import the following packages:

+```go

+import (

+	ginSwagger "github.com/swaggo/gin-swagger" // gin-swagger middleware

+	swaggerFiles "github.com/swaggo/files" // swagger embed files

+)

+```

+

+2. Add [General API](#general-api-info) annotations in `main.go` code:

+

+```go

+// @title Swagger Example API

+// @version 1.0

+// @description This is a sample server celler server.

+// @termsOfService http://swagger.io/terms/

+

+// @contact.name API Support

+// @contact.url http://www.swagger.io/support

+// @contact.email support@swagger.io

+

+// @license.name Apache 2.0

+// @license.url http://www.apache.org/licenses/LICENSE-2.0.html

+

+// @host localhost:8080

+// @BasePath /api/v1

+

+// @securityDefinitions.basic BasicAuth

+

+// @externalDocs.description OpenAPI

+// @externalDocs.url https://swagger.io/resources/open-api/

+func main() {

+	r := gin.Default()

+

+	c := controller.NewController()

+

+	v1 := r.Group("/api/v1")

+	{

+		accounts := v1.Group("/accounts")

+		{

+			accounts.GET(":id", c.ShowAccount)

+			accounts.GET("", c.ListAccounts)

+			accounts.POST("", c.AddAccount)

+			accounts.DELETE(":id", c.DeleteAccount)

+			accounts.PATCH(":id", c.UpdateAccount)

+			accounts.POST(":id/images", c.UploadAccountImage)

+		}

+		//...

+	}

+	r.GET("/swagger/*any", ginSwagger.WrapHandler(swaggerFiles.Handler))

+	r.Run(":8080")

+}

+//...

+```

+

+Additionally, some general API info can be set dynamically. The generated `docs` package exports a `SwaggerInfo` variable, which you can use to set the title, description, version, host, base path and schemes programmatically. Example using Gin:

+

+```go

+package main

+

+import (

+	"github.com/gin-gonic/gin"

+	swaggerFiles "github.com/swaggo/files"

+	ginSwagger "github.com/swaggo/gin-swagger"

+

+	"example-module-name/docs" // docs is generated by the swag CLI; replace example-module-name with your module path

+)

+

+// @contact.name API Support

+// @contact.url http://www.swagger.io/support

+// @contact.email support@swagger.io

+

+// @license.name Apache 2.0

+// @license.url http://www.apache.org/licenses/LICENSE-2.0.html

+func main() {

+	// programmatically set swagger info

+	docs.SwaggerInfo.Title = "Swagger Example API"

+	docs.SwaggerInfo.Description = "This is a sample server Petstore server."

+	docs.SwaggerInfo.Version = "1.0"

+	docs.SwaggerInfo.Host = "petstore.swagger.io"

+	docs.SwaggerInfo.BasePath = "/v2"

+	docs.SwaggerInfo.Schemes = []string{"http", "https"}

+

+	r := gin.New()

+

+	// use ginSwagger middleware to serve the API docs

+	r.GET("/swagger/*any", ginSwagger.WrapHandler(swaggerFiles.Handler))

+

+	r.Run()

+}

+```

+

+3. Add [API Operation](#api-operation) annotations in `controller` code:

+

+```go

+package controller

+

+import (

+	"fmt"

+	"net/http"

+	"strconv"

+

+	"github.com/gin-gonic/gin"

+	"github.com/swaggo/swag/example/celler/httputil"

+	"github.com/swaggo/swag/example/celler/model"

+)

+

+// ShowAccount godoc

+// @Summary Show an account

+// @Description get string by ID

+// @Tags accounts

+// @Accept json

+// @Produce json

+// @Param id path int true "Account ID"

+// @Success 200 {object} model.Account

+// @Failure 400 {object} httputil.HTTPError

+// @Failure 404 {object} httputil.HTTPError

+// @Failure 500 {object} httputil.HTTPError

+// @Router /accounts/{id} [get]

+func (c *Controller) ShowAccount(ctx *gin.Context) {

+	id := ctx.Param("id")

+	aid, err := strconv.Atoi(id)

+	if err != nil {

+		httputil.NewError(ctx, http.StatusBadRequest, err)

+		return

+	}

+	account, err := model.AccountOne(aid)

+	if err != nil {

+		httputil.NewError(ctx, http.StatusNotFound, err)

+		return

+	}

+	ctx.JSON(http.StatusOK, account)

+}

+

+// ListAccounts godoc

+// @Summary List accounts

+// @Description get accounts

+// @Tags accounts

+// @Accept json

+// @Produce json

+// @Param q query string false "name search by q" Format(email)

+// @Success 200 {array} model.Account

+// @Failure 400 {object} httputil.HTTPError

+// @Failure 404 {object} httputil.HTTPError

+// @Failure 500 {object} httputil.HTTPError

+// @Router /accounts [get]

+func (c *Controller) ListAccounts(ctx *gin.Context) {

+	q := ctx.Request.URL.Query().Get("q")

+	accounts, err := model.AccountsAll(q)

+	if err != nil {

+		httputil.NewError(ctx, http.StatusNotFound, err)

+		return

+	}

+	ctx.JSON(http.StatusOK, accounts)

+}

+//...

+```

+

+```console

+swag init

+```

+

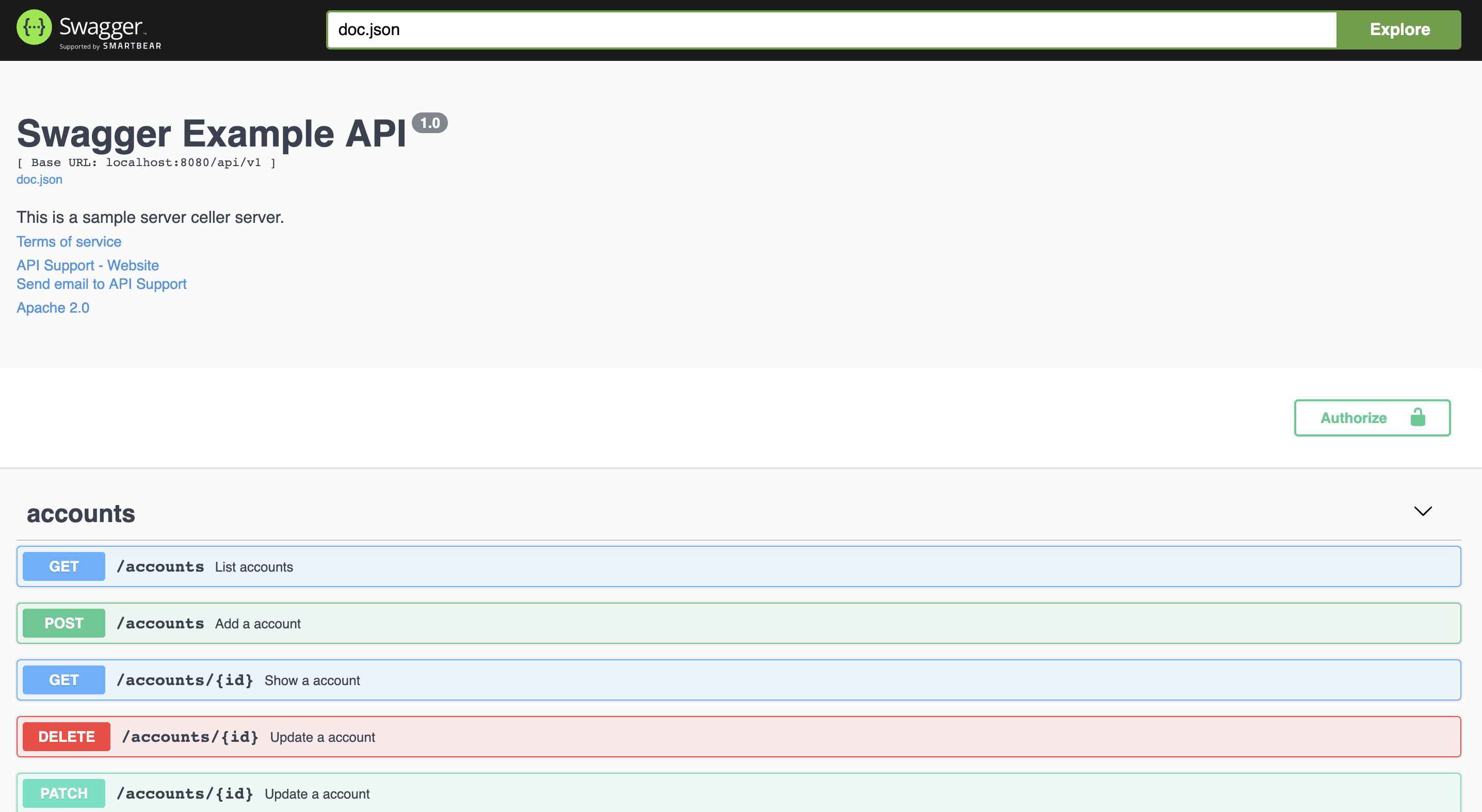

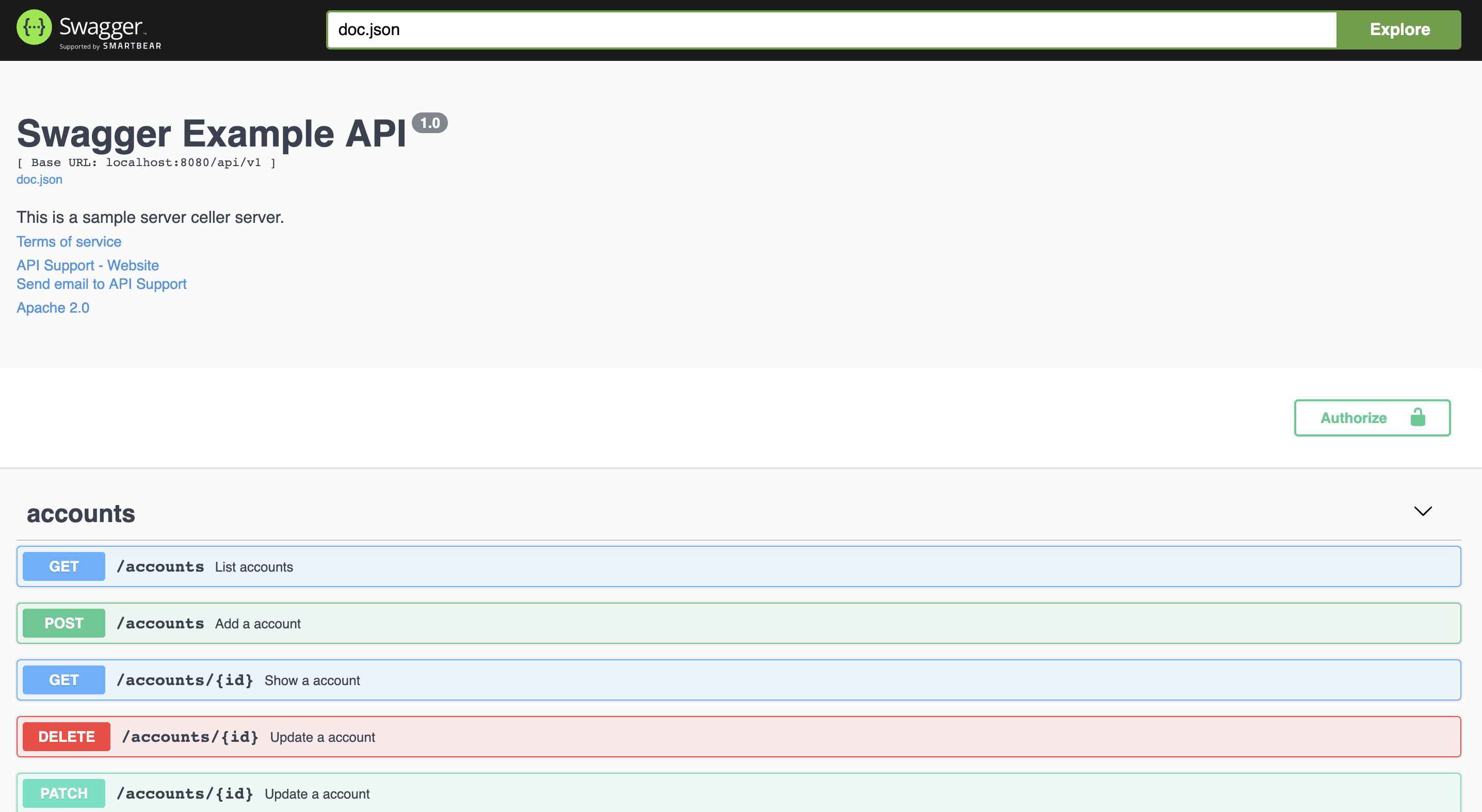

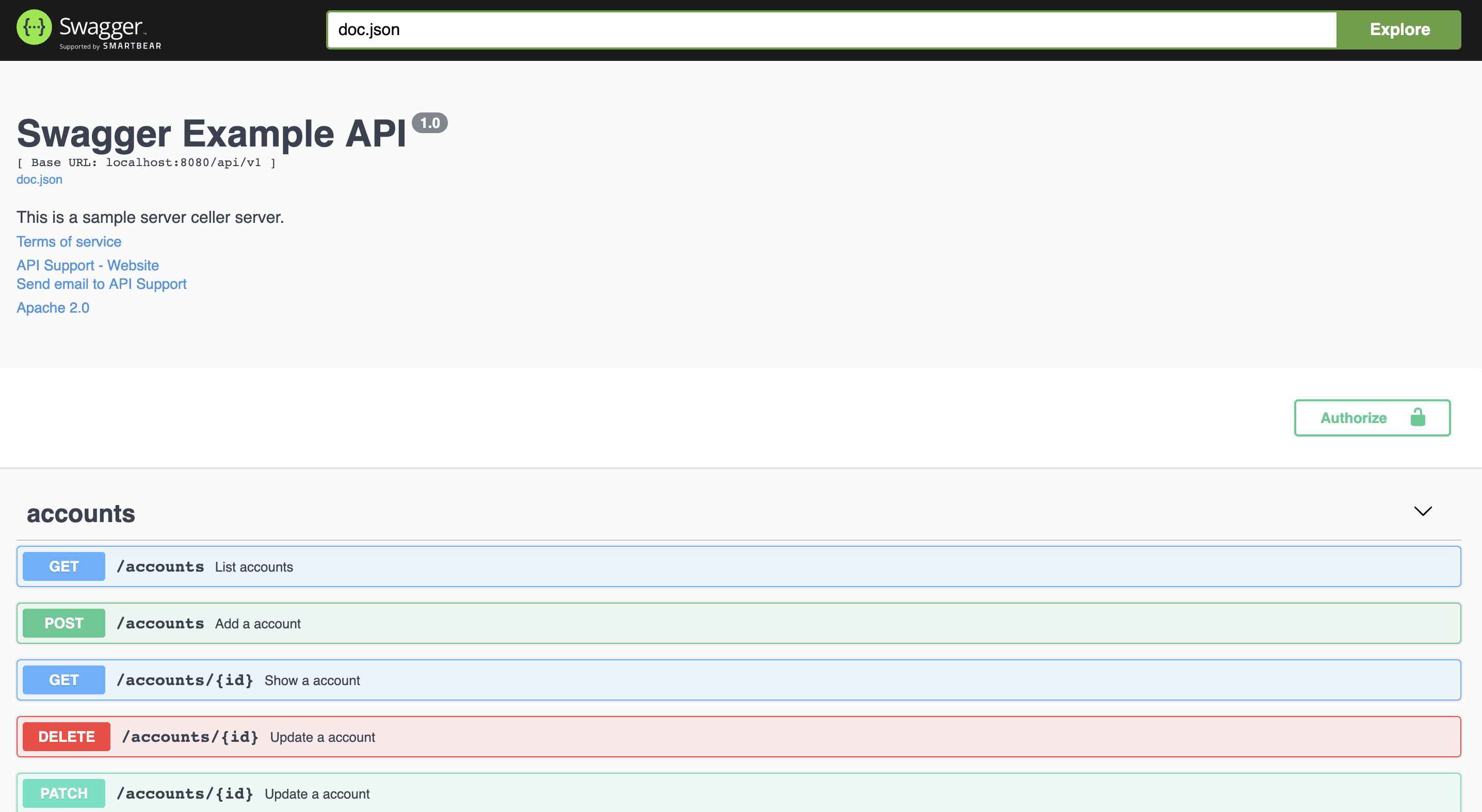

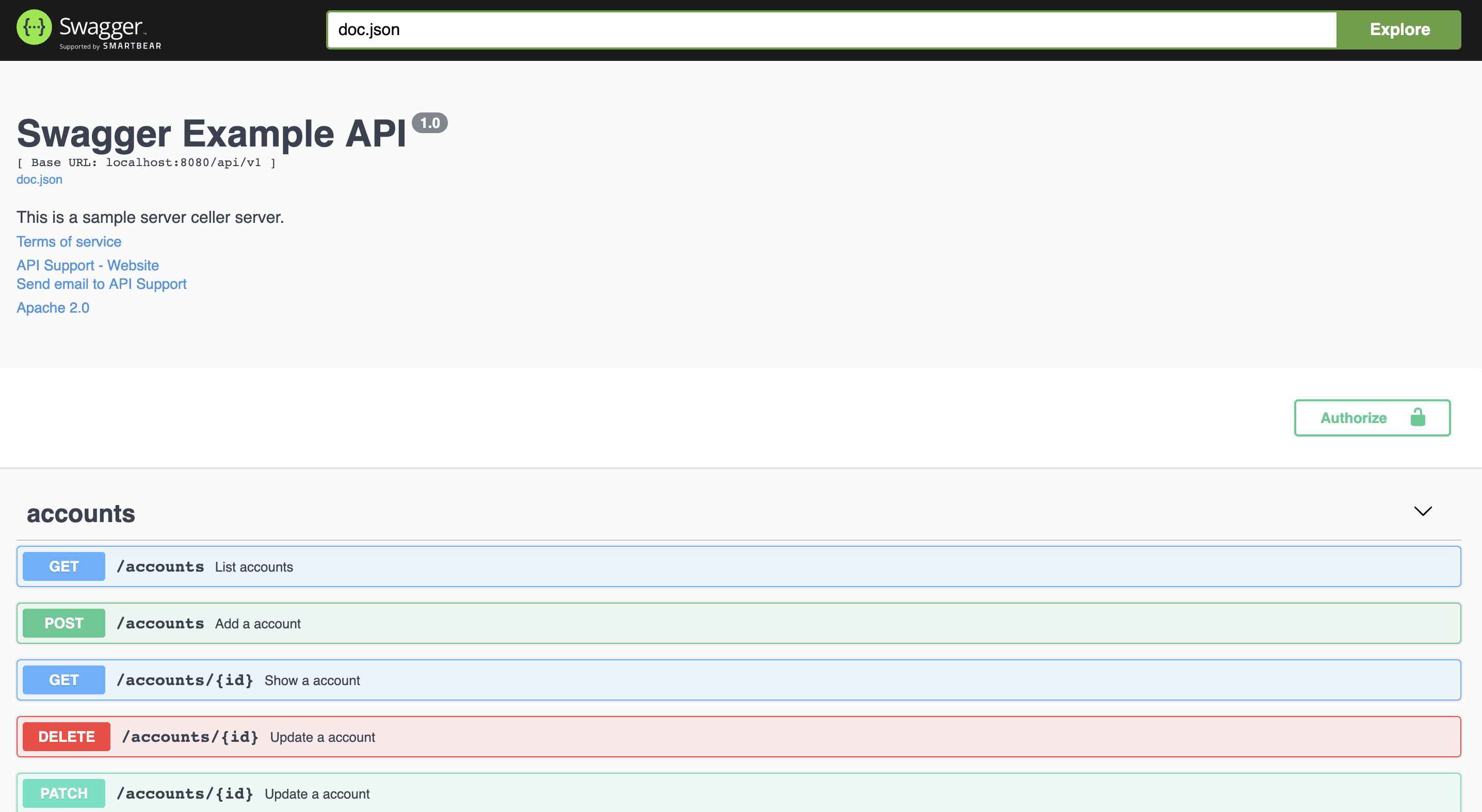

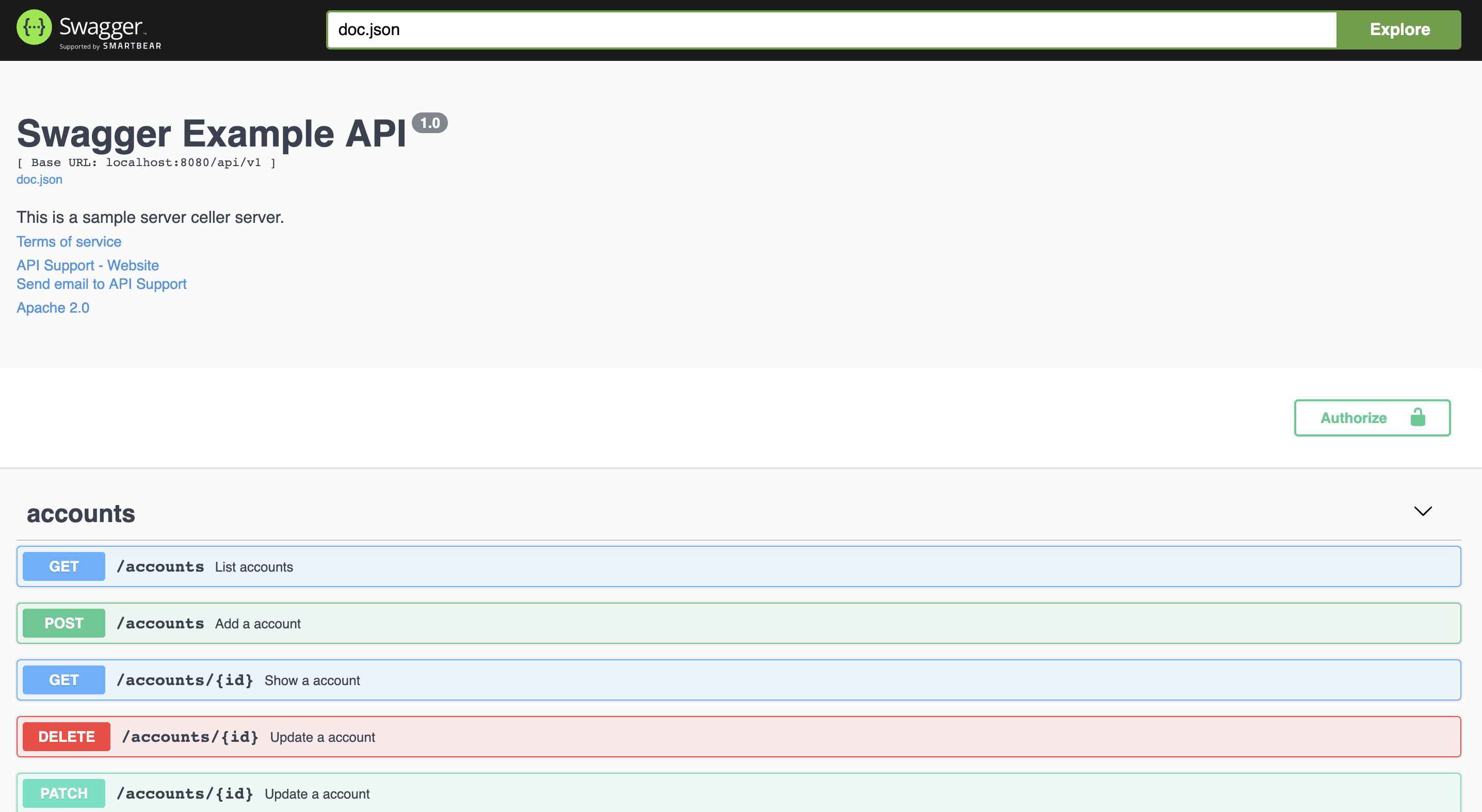

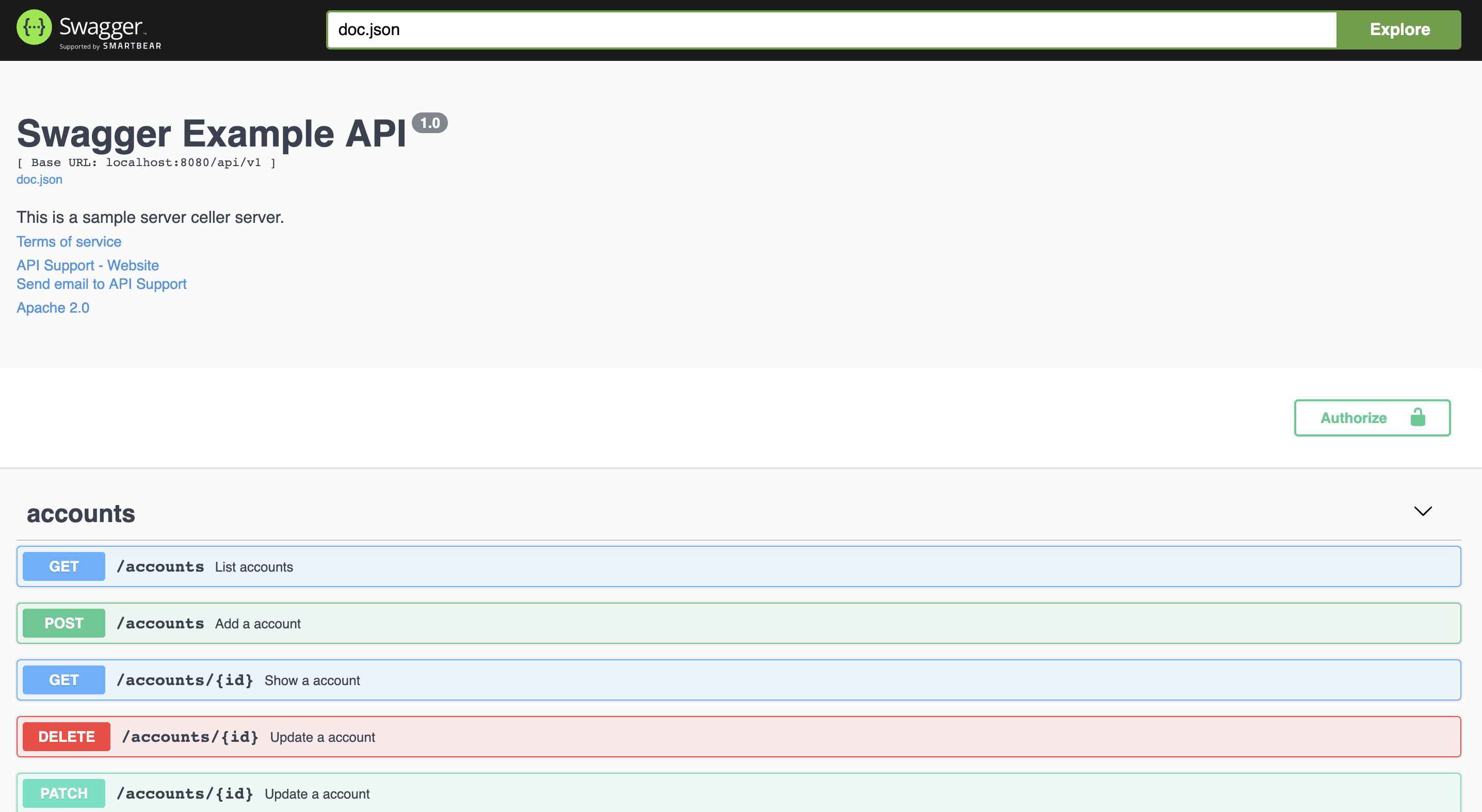

+4. Run your app and browse to http://localhost:8080/swagger/index.html. You will see the Swagger 2.0 API documentation, as shown below:

+

+

+

+## The swag formatter

+

+Swag comments can be formatted automatically, just like `go fmt` formats Go code.

+Find the result of formatting [here](https://github.com/swaggo/swag/tree/master/example/celler).

+

+Usage:

+```shell

+swag fmt

+```

+

+Exclude folder:

+```shell

+swag fmt -d ./ --exclude ./internal

+```

+

+When using `swag fmt`, make sure each function has a standard doc comment before its swag annotations; otherwise the comments may not be formatted correctly.

+This is because `swag fmt` indents swag comments with tabs, which is only allowed *after* a standard doc comment.

+

+For example, use:

+

+```go

+// ListAccounts lists all existing accounts

+//

+// @Summary List accounts

+// @Description get accounts

+// @Tags accounts

+// @Accept json

+// @Produce json

+// @Param q query string false "name search by q" Format(email)

+// @Success 200 {array} model.Account

+// @Failure 400 {object} httputil.HTTPError

+// @Failure 404 {object} httputil.HTTPError

+// @Failure 500 {object} httputil.HTTPError

+// @Router /accounts [get]

+func (c *Controller) ListAccounts(ctx *gin.Context) {

+	// ...

+}

+```

+

+## Implementation Status

+

+[Swagger 2.0 document](https://swagger.io/docs/specification/2-0/basic-structure/)

+

+- [x] Basic Structure

+- [x] API Host and Base Path

+- [x] Paths and Operations

+- [x] Describing Parameters

+- [x] Describing Request Body

+- [x] Describing Responses

+- [x] MIME Types

+- [x] Authentication

+ - [x] Basic Authentication

+ - [x] API Keys

+- [x] Adding Examples

+- [x] File Upload

+- [x] Enums

+- [x] Grouping Operations With Tags

+- [ ] Swagger Extensions

+

+# Declarative Comments Format

+

+## General API Info

+

+**Example**

+[celler/main.go](https://github.com/swaggo/swag/blob/master/example/celler/main.go)

+

+| annotation | description | example |

+|-------------|--------------------------------------------|---------------------------------|

+| title | **Required.** The title of the application.| // @title Swagger Example API |

+| version | **Required.** Provides the version of the application API.| // @version 1.0 |

+| description | A short description of the application. |// @description This is a sample server celler server. |

+| tag.name | Name of a tag.| // @tag.name This is the name of the tag |

+| tag.description | Description of the tag | // @tag.description Cool Description |

+| tag.docs.url | URL of the external documentation of the tag. | // @tag.docs.url https://example.com|

+| tag.docs.description | Description of the external documentation of the tag. | // @tag.docs.description Best example documentation |

+| termsOfService | The Terms of Service for the API.| // @termsOfService http://swagger.io/terms/ |

+| contact.name | The identifying name of the contact person/organization. | // @contact.name API Support |

+| contact.url | The URL pointing to the contact information. MUST be in the format of a URL. | // @contact.url http://www.swagger.io/support|

+| contact.email| The email address of the contact person/organization. MUST be in the format of an email address.| // @contact.email support@swagger.io |

+| license.name | **Required.** The license name used for the API.|// @license.name Apache 2.0|

+| license.url | A URL to the license used for the API. MUST be in the format of a URL. | // @license.url http://www.apache.org/licenses/LICENSE-2.0.html |

+| host | The host (name or IP) serving the API. | // @host localhost:8080 |

+| BasePath | The base path on which the API is served. | // @BasePath /api/v1 |

+| accept | A list of MIME types the APIs can consume. Note that Accept only affects operations with a request body, such as POST, PUT and PATCH. Value MUST be as described under [Mime Types](#mime-types). | // @accept json |

+| produce | A list of MIME types the APIs can produce. Value MUST be as described under [Mime Types](#mime-types). | // @produce json |

+| query.collection.format | The default collection (array) format for query parameters. Enums: `csv`, `multi`, `pipes`, `tsv`, `ssv`. If not set, `csv` is the default. | // @query.collection.format multi |

+| schemes | The transfer protocols for the operation, separated by spaces. | // @schemes http https |

+| externalDocs.description | Description of the external document. | // @externalDocs.description OpenAPI |

+| externalDocs.url | URL of the external document. | // @externalDocs.url https://swagger.io/resources/open-api/ |

+| x-name | The extension key; it must start with `x-` and takes only a JSON value. | // @x-example-key {"key": "value"} |
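`@query.collection.format` (and the per-parameter `collectionFormat(...)` attribute) only documents how an array query parameter is serialized; clients and handlers still have to agree on it. As a sketch of what each Swagger 2.0 format looks like on the wire, the hypothetical helper `encodeQuery` below encodes a `[]string` under each format using only the standard library. Note that `url.Values.Encode` percent-escapes the separators.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// encodeQuery is a hypothetical helper that serializes values for the query
// parameter name according to a Swagger 2.0 collectionFormat.
func encodeQuery(name string, values []string, format string) string {
	q := url.Values{}
	switch format {
	case "multi":
		// multi: one name=value pair per element.
		for _, v := range values {
			q.Add(name, v)
		}
	case "ssv":
		q.Set(name, strings.Join(values, " "))
	case "tsv":
		q.Set(name, strings.Join(values, "\t"))
	case "pipes":
		q.Set(name, strings.Join(values, "|"))
	default:
		// csv is the default format.
		q.Set(name, strings.Join(values, ","))
	}
	return q.Encode()
}

func main() {
	ids := []string{"a", "b"}
	for _, f := range []string{"csv", "ssv", "tsv", "pipes", "multi"} {
		fmt.Printf("%-5s %s\n", f, encodeQuery("id", ids, f))
	}
}
```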

+

+### Using markdown descriptions

+When a short string in your documentation is insufficient, or you need images, code examples and similar content, you may want to use markdown descriptions. To use markdown descriptions, use the following annotations. The markdown files are read from the folder passed to `swag init` with `--markdownFiles` (`--md`).

+

+

+| annotation | description | example |

+|-------------|--------------------------------------------|---------------------------------|

+| title | **Required.** The title of the application.| // @title Swagger Example API |

+| version | **Required.** Provides the version of the application API.| // @version 1.0 |

+| description.markdown | A short description of the application. Parsed from the api.md file. This is an alternative to @description |// @description.markdown No value needed, this parses the description from api.md |

+| tag.name | Name of a tag.| // @tag.name This is the name of the tag |

+| tag.description.markdown | Description of the tag; an alternative to tag.description. The description is read from a file named after the tag (tagname.md). | // @tag.description.markdown |

+

+

+## API Operation

+

+**Example**

+[celler/controller](https://github.com/swaggo/swag/tree/master/example/celler/controller)

+

+

+| annotation | description |

+|----------------------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| description | A verbose explanation of the operation behavior. |

+| description.markdown | A short description of the operation, read from a markdown file. E.g. `@description.markdown details` loads `details.md`. |

+| id | A unique string used to identify the operation. Must be unique among all API operations. |

+| tags | A list of tags for the API operation, separated by commas. |

+| summary | A short summary of what the operation does. |

+| accept | A list of MIME types the APIs can consume. Note that Accept only affects operations with a request body, such as POST, PUT and PATCH. Value MUST be as described under [Mime Types](#mime-types). |

+| produce | A list of MIME types the APIs can produce. Value MUST be as described under [Mime Types](#mime-types). |

+| param | Parameters, with fields separated by spaces: `param name` `param type` `data type` `is mandatory?` `comment` `attribute(optional)` |

+| security | [Security](#security) for the API operation. |

+| success | Success response, with fields separated by spaces: `return code or default` `{param type}` `data type` `comment` |

+| failure | Failure response, with fields separated by spaces: `return code or default` `{param type}` `data type` `comment` |

+| response | Same as `success` and `failure`. |

+| header | Header in the response, with fields separated by spaces: `return code` `{param type}` `data type` `comment` |

+| router | Path definition, with fields separated by spaces: `path` `[httpMethod]` |

+| deprecatedrouter | Same as `router`, but marks the route as deprecated. |

+| x-name | The extension key; it must start with `x-` and takes only a JSON value. |

+| x-codeSample | Optional code sample. Takes `file` as a parameter and then searches the given folder (see `--codeExampleFiles`) for a file named after the summary. |

+| deprecated | Mark endpoint as deprecated. |
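To make the `param` field order concrete, the sketch below splits a `@Param` line into the five positional fields described above (name, param type, data type, required flag, quoted comment) and keeps any trailing attributes as raw text. It is a simplified illustration under those assumptions, not swag's parser: `ParamSpec` and `parseParam` are hypothetical names, and attributes such as `Enums(...)` are not interpreted.

```go
package main

import (
	"errors"
	"fmt"
	"strconv"
	"strings"
)

// ParamSpec is a hypothetical representation of one @Param annotation.
type ParamSpec struct {
	Name, In, DataType string
	Required           bool
	Comment            string
	Attributes         string // e.g. `Enums(A, B, C)`, left unparsed
}

// parseParam splits the text after "@Param" into its positional fields.
func parseParam(line string) (ParamSpec, error) {
	var p ParamSpec
	fields := strings.Fields(line)
	if len(fields) < 5 {
		return p, errors.New(`expected: name in type required "comment" [attributes]`)
	}
	p.Name, p.In, p.DataType = fields[0], fields[1], fields[2]
	req, err := strconv.ParseBool(fields[3])
	if err != nil {
		return p, fmt.Errorf("required flag: %w", err)
	}
	p.Required = req
	// The comment is the first double-quoted section; it may contain spaces.
	start := strings.Index(line, `"`)
	end := -1
	if start >= 0 {
		end = strings.Index(line[start+1:], `"`)
	}
	if end < 0 {
		return p, errors.New("comment must be double-quoted")
	}
	p.Comment = line[start+1 : start+1+end]
	// Everything after the closing quote is treated as attributes.
	p.Attributes = strings.TrimSpace(line[start+2+end:])
	return p, nil
}

func main() {
	p, err := parseParam(`q query string false "name search by q" Format(email)`)
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", p)
}
```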

+

+

+

+## Mime Types

+

+`swag` accepts all MIME Types which are in the correct format, that is, match `*/*`.

+Besides that, `swag` also accepts aliases for some MIME Types as follows:

+

+| Alias | MIME Type |

+|-----------------------|-----------------------------------|

+| json | application/json |

+| xml | text/xml |

+| plain | text/plain |

+| html | text/html |

+| mpfd | multipart/form-data |

+| x-www-form-urlencoded | application/x-www-form-urlencoded |

+| json-api | application/vnd.api+json |

+| json-stream | application/x-json-stream |

+| octet-stream | application/octet-stream |

+| png | image/png |

+| jpeg | image/jpeg |

+| gif | image/gif |

+

+

+

+## Param Type

+

+- query

+- path

+- header

+- body

+- formData

+

+## Data Type

+

+- string (string)

+- integer (int, uint, uint32, uint64)

+- number (float32)

+- boolean (bool)

+- file (param data type when uploading)

+- user defined struct

+

+## Security

+| annotation | description | parameters | example |

+|------------|-------------|------------|---------|

+| securitydefinitions.basic | [Basic](https://swagger.io/docs/specification/2-0/authentication/basic-authentication/) auth. | | // @securityDefinitions.basic BasicAuth |

+| securitydefinitions.apikey | [API key](https://swagger.io/docs/specification/2-0/authentication/api-keys/) auth. | in, name, description | // @securityDefinitions.apikey ApiKeyAuth |

+| securitydefinitions.oauth2.application | [OAuth2 application](https://swagger.io/docs/specification/authentication/oauth2/) auth. | tokenUrl, scope, description | // @securitydefinitions.oauth2.application OAuth2Application |

+| securitydefinitions.oauth2.implicit | [OAuth2 implicit](https://swagger.io/docs/specification/authentication/oauth2/) auth. | authorizationUrl, scope, description | // @securitydefinitions.oauth2.implicit OAuth2Implicit |

+| securitydefinitions.oauth2.password | [OAuth2 password](https://swagger.io/docs/specification/authentication/oauth2/) auth. | tokenUrl, scope, description | // @securitydefinitions.oauth2.password OAuth2Password |

+| securitydefinitions.oauth2.accessCode | [OAuth2 access code](https://swagger.io/docs/specification/authentication/oauth2/) auth. | tokenUrl, authorizationUrl, scope, description | // @securitydefinitions.oauth2.accessCode OAuth2AccessCode |

+

+

+| parameters annotation | example |

+|---------------------------------|-------------------------------------------------------------------------|

+| in | // @in header |

+| name | // @name Authorization |

+| tokenUrl | // @tokenUrl https://example.com/oauth/token |

+| authorizationurl | // @authorizationurl https://example.com/oauth/authorize |

+| scope.{name} | // @scope.write Grants write access |

+| description | // @description OAuth protects our entity endpoints |

+

+## Attribute

+

+```go

+// @Param enumstring query string false "string enums" Enums(A, B, C)

+// @Param enumint query int false "int enums" Enums(1, 2, 3)

+// @Param enumnumber query number false "number enums" Enums(1.1, 1.2, 1.3)

+// @Param string query string false "string valid" minlength(5) maxlength(10)

+// @Param int query int false "int valid" minimum(1) maximum(10)

+// @Param default query string false "string default" default(A)

+// @Param example query string false "string example" example(string)

+// @Param collection query []string false "string collection" collectionFormat(multi)

+// @Param extensions query []string false "string collection" extensions(x-example=test,x-nullable)

+```

+

+It also works for struct fields:

+

+```go

+type Foo struct {

+	Bar string   `minLength:"4" maxLength:"16" example:"random string"`

+	Baz int      `minimum:"10" maximum:"20" default:"15"`

+	Qux []string `enums:"foo,bar,baz"`

+}

+```

+

+### Available

+

+Field Name | Type | Description

+---|:---:|---

+validate | `string` | Determines the validation for the parameter. Possible values are: `required,optional`.

+default | * | Declares the value of the parameter that the server will use if none is provided, for example a "count" to control the number of results per page might default to 100 if not supplied by the client in the request. (Note: "default" has no meaning for required parameters.) See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-6.2. Unlike JSON Schema this value MUST conform to the defined [`type`](#parameterType) for this parameter.

+maximum | `number` | See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-5.1.2.

+minimum | `number` | See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-5.1.3.

+multipleOf | `number` | See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-5.1.1.

+maxLength | `integer` | See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-5.2.1.

+minLength | `integer` | See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-5.2.2.

+enums | [\*] | See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-5.5.1.

+format | `string` | The extending format for the previously mentioned [`type`](#parameterType). See [Data Type Formats](https://swagger.io/specification/v2/#dataTypeFormat) for further details.

+collectionFormat | `string` |Determines the format of the array if type array is used. Possible values are:

+

+[](https://github.com/features/actions)

+[](https://codecov.io/gh/swaggo/swag)

+[](https://goreportcard.com/report/github.com/swaggo/swag)

+[](https://codebeat.co/projects/github-com-swaggo-swag-master)

+[](https://godoc.org/github.com/swaggo/swag)

+[](#backers)

+[](#sponsors) [](https://app.fossa.io/projects/git%2Bgithub.com%2Fswaggo%2Fswag?ref=badge_shield)

+[](https://github.com/swaggo/swag/releases)

+

+

+Swag converts Go annotations to Swagger Documentation 2.0. We've created a variety of plugins for popular [Go web frameworks](#supported-web-frameworks). This allows you to quickly integrate with an existing Go project (using Swagger UI).

+

+## Contents

+ - [Getting started](#getting-started)

+ - [Supported Web Frameworks](#supported-web-frameworks)

+ - [How to use it with Gin](#how-to-use-it-with-gin)

+ - [The swag formatter](#the-swag-formatter)

+ - [Implementation Status](#implementation-status)

+ - [Declarative Comments Format](#declarative-comments-format)

+ - [General API Info](#general-api-info)

+ - [API Operation](#api-operation)

+ - [Security](#security)

+ - [Examples](#examples)

+ - [Descriptions over multiple lines](#descriptions-over-multiple-lines)

+ - [User defined structure with an array type](#user-defined-structure-with-an-array-type)

+ - [Function scoped struct declaration](#function-scoped-struct-declaration)

+ - [Model composition in response](#model-composition-in-response)

+ - [Add a headers in response](#add-a-headers-in-response)

+ - [Use multiple path params](#use-multiple-path-params)

+ - [Example value of struct](#example-value-of-struct)

+ - [SchemaExample of body](#schemaexample-of-body)

+ - [Description of struct](#description-of-struct)

+ - [Use swaggertype tag to supported custom type](#use-swaggertype-tag-to-supported-custom-type)

+ - [Use global overrides to support a custom type](#use-global-overrides-to-support-a-custom-type)

+ - [Use swaggerignore tag to exclude a field](#use-swaggerignore-tag-to-exclude-a-field)

+ - [Add extension info to struct field](#add-extension-info-to-struct-field)

+ - [Rename model to display](#rename-model-to-display)

+ - [How to use security annotations](#how-to-use-security-annotations)

+ - [Add a description for enum items](#add-a-description-for-enum-items)

+ - [Generate only specific docs file types](#generate-only-specific-docs-file-types)

+ - [How to use Go generic types](#how-to-use-generics)

+- [About the Project](#about-the-project)

+

+## Getting started

+

+1. Add comments to your API source code, See [Declarative Comments Format](#declarative-comments-format).

+

+2. Install swag by using:

+```sh

+go install github.com/swaggo/swag/cmd/swag@latest

+```

+To build from source you need [Go](https://golang.org/dl/) (1.18 or newer).

+

+Alternatively you can run the docker image:

+```sh

+docker run --rm -v $(pwd):/code ghcr.io/swaggo/swag:latest

+```

+

+Or download a pre-compiled binary from the [release page](https://github.com/swaggo/swag/releases).

+

+3. Run `swag init` in the project's root folder which contains the `main.go` file. This will parse your comments and generate the required files (`docs` folder and `docs/docs.go`).

+```sh

+swag init

+```

+

+ Make sure to import the generated `docs/docs.go` so that your specific configuration gets `init`'ed. If your General API annotations do not live in `main.go`, you can let swag know with `-g` flag.

+ ```go

+ import _ "example-module-name/docs"

+ ```

+ ```sh

+ swag init -g http/api.go

+ ```

+

+4. (optional) Use `swag fmt` format the SWAG comment. (Please upgrade to the latest version)

+

+ ```sh

+ swag fmt

+ ```

+

+## swag cli

+

+```sh

+swag init -h

+NAME:

+ swag init - Create docs.go

+

+USAGE:

+ swag init [command options] [arguments...]

+

+OPTIONS:

+ --quiet, -q Make the logger quiet. (default: false)

+ --generalInfo value, -g value Go file path in which 'swagger general API Info' is written (default: "main.go")

+ --dir value, -d value Directories you want to parse,comma separated and general-info file must be in the first one (default: "./")

+ --exclude value Exclude directories and files when searching, comma separated

+ --propertyStrategy value, -p value Property Naming Strategy like snakecase,camelcase,pascalcase (default: "camelcase")

+ --output value, -o value Output directory for all the generated files(swagger.json, swagger.yaml and docs.go) (default: "./docs")

+ --outputTypes value, --ot value Output types of generated files (docs.go, swagger.json, swagger.yaml) like go,json,yaml (default: "go,json,yaml")

+ --parseVendor Parse go files in 'vendor' folder, disabled by default (default: false)

+ --parseDependency, --pd Parse go files inside dependency folder, disabled by default (default: false)

+ --markdownFiles value, --md value Parse folder containing markdown files to use as description, disabled by default

+ --codeExampleFiles value, --cef value Parse folder containing code example files to use for the x-codeSamples extension, disabled by default

+ --parseInternal Parse go files in internal packages, disabled by default (default: false)

+ --generatedTime Generate timestamp at the top of docs.go, disabled by default (default: false)

+ --parseDepth value Dependency parse depth (default: 100)

+ --requiredByDefault Set validation required for all fields by default (default: false)

+ --instanceName value This parameter can be used to name different swagger document instances. It is optional.

+ --overridesFile value File to read global type overrides from. (default: ".swaggo")

+ --parseGoList Parse dependency via 'go list' (default: true)

+ --tags value, -t value A comma-separated list of tags to filter the APIs for which the documentation is generated.Special case if the tag is prefixed with the '!' character then the APIs with that tag will be excluded

+ --templateDelims value, --td value Provide custom delimeters for Go template generation. The format is leftDelim,rightDelim. For example: "[[,]]"

+ --collectionFormat value, --cf value Set default collection format (default: "csv")

+ --state value Initial state for the state machine (default: ""), @HostState in root file, @State in other files

+ --help, -h show help (default: false)

+```

+

+```bash

+swag fmt -h

+NAME:

+ swag fmt - format swag comments

+

+USAGE:

+ swag fmt [command options] [arguments...]

+

+OPTIONS:

+ --dir value, -d value Directories you want to parse, comma separated and general-info file must be in the first one (default: "./")

+ --exclude value Exclude directories and files when searching, comma separated

+ --generalInfo value, -g value Go file path in which 'swagger general API Info' is written (default: "main.go")

+ --help, -h show help (default: false)

+

+```

+

+## Supported Web Frameworks

+

+- [gin](http://github.com/swaggo/gin-swagger)

+- [echo](http://github.com/swaggo/echo-swagger)

+- [buffalo](https://github.com/swaggo/buffalo-swagger)

+- [net/http](https://github.com/swaggo/http-swagger)

+- [gorilla/mux](https://github.com/swaggo/http-swagger)

+- [go-chi/chi](https://github.com/swaggo/http-swagger)

+- [flamingo](https://github.com/i-love-flamingo/swagger)

+- [fiber](https://github.com/gofiber/swagger)

+- [atreugo](https://github.com/Nerzal/atreugo-swagger)

+- [hertz](https://github.com/hertz-contrib/swagger)

+

+## How to use it with Gin

+

+Find the example source code [here](https://github.com/swaggo/swag/tree/master/example/celler).

+

+Finish the steps in [Getting started](#getting-started) first.

+1. After using `swag init` to generate Swagger 2.0 docs, import the following packages:

+```go

+import ginSwagger "github.com/swaggo/gin-swagger" // gin-swagger middleware
+import swaggerFiles "github.com/swaggo/files" // swagger embed files

+```

+

+2. Add [General API](#general-api-info) annotations in `main.go` code:

+

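+```go
+package main
+
+import (
+	"github.com/gin-gonic/gin"
+	swaggerFiles "github.com/swaggo/files"
+	ginSwagger "github.com/swaggo/gin-swagger"
+
+	"github.com/swaggo/swag/example/celler/controller"
+	_ "github.com/swaggo/swag/example/celler/docs" // docs generated by swag init
+)
+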

+// @title Swagger Example API

+// @version 1.0

+// @description This is a sample server celler server.

+// @termsOfService http://swagger.io/terms/

+

+// @contact.name API Support

+// @contact.url http://www.swagger.io/support

+// @contact.email support@swagger.io

+

+// @license.name Apache 2.0

+// @license.url http://www.apache.org/licenses/LICENSE-2.0.html

+

+// @host localhost:8080

+// @BasePath /api/v1

+

+// @securityDefinitions.basic BasicAuth

+

+// @externalDocs.description OpenAPI

+// @externalDocs.url https://swagger.io/resources/open-api/

+func main() {

+ r := gin.Default()

+

+ c := controller.NewController()

+

+ v1 := r.Group("/api/v1")

+ {

+ accounts := v1.Group("/accounts")

+ {

+ accounts.GET(":id", c.ShowAccount)

+ accounts.GET("", c.ListAccounts)

+ accounts.POST("", c.AddAccount)

+ accounts.DELETE(":id", c.DeleteAccount)

+ accounts.PATCH(":id", c.UpdateAccount)

+ accounts.POST(":id/images", c.UploadAccountImage)

+ }

+ //...

+ }

+ r.GET("/swagger/*any", ginSwagger.WrapHandler(swaggerFiles.Handler))

+ r.Run(":8080")

+}

+//...

+```

+

+Additionally, some general API info can be set dynamically. The generated `docs` package exports a `SwaggerInfo` variable, which you can use to set the title, description, version, host, base path and schemes programmatically. Example using Gin:

+

+```go

+package main

+

+import (

+ "github.com/gin-gonic/gin"

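+	swaggerFiles "github.com/swaggo/files"
+	ginSwagger "github.com/swaggo/gin-swagger"
+
+	// docs is generated by Swag CLI, you have to import it. Relative paths such as
+	// "./docs" are not allowed in module mode, so use your module path instead;
+	// example.com/myapp is a hypothetical placeholder.
+	"example.com/myapp/docs"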

+)

+

+// @contact.name API Support

+// @contact.url http://www.swagger.io/support

+// @contact.email support@swagger.io

+

+// @license.name Apache 2.0

+// @license.url http://www.apache.org/licenses/LICENSE-2.0.html

+func main() {

+

+ // programmatically set swagger info

+ docs.SwaggerInfo.Title = "Swagger Example API"

+ docs.SwaggerInfo.Description = "This is a sample server Petstore server."

+ docs.SwaggerInfo.Version = "1.0"

+ docs.SwaggerInfo.Host = "petstore.swagger.io"

+ docs.SwaggerInfo.BasePath = "/v2"

+ docs.SwaggerInfo.Schemes = []string{"http", "https"}

+

+ r := gin.New()

+

+ // use ginSwagger middleware to serve the API docs

+ r.GET("/swagger/*any", ginSwagger.WrapHandler(swaggerFiles.Handler))

+

+ r.Run()

+}

+```

+

+3. Add [API Operation](#api-operation) annotations in `controller` code:
+
+```go

+package controller

+

+import (

+ "fmt"

+ "net/http"

+ "strconv"

+

+ "github.com/gin-gonic/gin"

+ "github.com/swaggo/swag/example/celler/httputil"

+ "github.com/swaggo/swag/example/celler/model"

+)

+

+// ShowAccount godoc

+// @Summary Show an account

+// @Description get string by ID

+// @Tags accounts

+// @Accept json

+// @Produce json

+// @Param id path int true "Account ID"

+// @Success 200 {object} model.Account

+// @Failure 400 {object} httputil.HTTPError

+// @Failure 404 {object} httputil.HTTPError

+// @Failure 500 {object} httputil.HTTPError

+// @Router /accounts/{id} [get]

+func (c *Controller) ShowAccount(ctx *gin.Context) {

+ id := ctx.Param("id")

+ aid, err := strconv.Atoi(id)

+ if err != nil {

+ httputil.NewError(ctx, http.StatusBadRequest, err)

+ return

+ }

+ account, err := model.AccountOne(aid)

+ if err != nil {

+ httputil.NewError(ctx, http.StatusNotFound, err)

+ return

+ }

+ ctx.JSON(http.StatusOK, account)

+}

+

+// ListAccounts godoc

+// @Summary List accounts

+// @Description get accounts

+// @Tags accounts

+// @Accept json

+// @Produce json

+// @Param q query string false "name search by q" Format(email)

+// @Success 200 {array} model.Account

+// @Failure 400 {object} httputil.HTTPError

+// @Failure 404 {object} httputil.HTTPError

+// @Failure 500 {object} httputil.HTTPError

+// @Router /accounts [get]

+func (c *Controller) ListAccounts(ctx *gin.Context) {

+ q := ctx.Request.URL.Query().Get("q")

+ accounts, err := model.AccountsAll(q)

+ if err != nil {

+ httputil.NewError(ctx, http.StatusNotFound, err)

+ return

+ }

+ ctx.JSON(http.StatusOK, accounts)

+}

+//...

+```

+

+```console

+swag init

+```
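If you serve the API without Gin, you can keep the same contract using only the standard library. The following is a minimal `net/http` sketch of `ShowAccount` and is not part of the celler example. `Account`, `HTTPError` and the in-memory `store` are hypothetical stand-ins for `model.Account`, `httputil.HTTPError` and the model layer. The `/api/v1/accounts/` prefix is assumed from the `@BasePath` above. The point is that every `@Success`/`@Failure` line should match a status code the handler can actually return.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"strconv"
	"strings"
)

// Account is a hypothetical stand-in for model.Account.
type Account struct {
	ID   int    `json:"id" example:"1"`
	Name string `json:"name" example:"account name"`
}

// HTTPError is a hypothetical stand-in for httputil.HTTPError.
type HTTPError struct {
	Code    int    `json:"code" example:"400"`
	Message string `json:"message" example:"status bad request"`
}

// store is an in-memory account table used only by this sketch.
var store = map[int]Account{1: {ID: 1, Name: "account name"}}

func writeJSON(w http.ResponseWriter, status int, v any) {
	w.Header().Set("Content-Type", "application/json")
	w.WriteHeader(status)
	_ = json.NewEncoder(w).Encode(v)
}

// ShowAccount godoc
// @Summary Show an account
// @Produce json
// @Param id path int true "Account ID"
// @Success 200 {object} Account
// @Failure 400 {object} HTTPError
// @Failure 404 {object} HTTPError
// @Router /accounts/{id} [get]
func ShowAccount(w http.ResponseWriter, r *http.Request) {
	aid, err := strconv.Atoi(strings.TrimPrefix(r.URL.Path, "/api/v1/accounts/"))
	if err != nil { // non-numeric id: documented as @Failure 400
		writeJSON(w, http.StatusBadRequest, HTTPError{Code: http.StatusBadRequest, Message: err.Error()})
		return
	}
	account, ok := store[aid]
	if !ok { // unknown id: documented as @Failure 404
		writeJSON(w, http.StatusNotFound, HTTPError{Code: http.StatusNotFound, Message: "account not found"})
		return
	}
	writeJSON(w, http.StatusOK, account) // documented as @Success 200
}

// statusFor sends a GET request for path through ShowAccount and returns
// the response status code.
func statusFor(path string) int {
	rec := httptest.NewRecorder()
	ShowAccount(rec, httptest.NewRequest(http.MethodGet, path, nil))
	return rec.Code
}

func main() {
	for _, p := range []string{"/api/v1/accounts/1", "/api/v1/accounts/abc", "/api/v1/accounts/2"} {
		fmt.Println(p, statusFor(p))
	}
}
```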

+

+4. Run your app, and browse to http://localhost:8080/swagger/index.html. You will see the Swagger 2.0 API documents.

+

+

+

+## The swag formatter

+

+Swag comments can be formatted automatically, just like `go fmt`.

+Find the result of formatting [here](https://github.com/swaggo/swag/tree/master/example/celler).

+

+Usage:

+```shell

+swag fmt

+```

+

+Exclude folder:

+```shell

+swag fmt -d ./ --exclude ./internal

+```

+

+When using `swag fmt`, make sure the function has a standard doc comment; otherwise the formatting may come out wrong.
+This is because `swag fmt` indents swag comments with tabs, which is only allowed *after* a standard doc comment.
+
+For example, use:

+

+```go

+// ListAccounts lists all existing accounts

+//

+// @Summary List accounts

+// @Description get accounts

+// @Tags accounts

+// @Accept json

+// @Produce json

+// @Param q query string false "name search by q" Format(email)

+// @Success 200 {array} model.Account

+// @Failure 400 {object} httputil.HTTPError

+// @Failure 404 {object} httputil.HTTPError

+// @Failure 500 {object} httputil.HTTPError

+// @Router /accounts [get]

+func (c *Controller) ListAccounts(ctx *gin.Context) {

+```
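Swag annotations belong to the function's doc comment, so a tool reads them from the comment group attached to the function. The standalone sketch below uses only `go/parser` to collect the `@` lines from the doc comment of a hypothetical `ListAccounts`, with the annotations tab-indented after a standard first line roughly the way `swag fmt` lays them out. It is an illustration only and is not swag's own parser.

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
	"go/token"
	"strings"
)

// src mimics a controller file whose annotations are tab-indented after a
// standard doc comment line.
const src = `package controller

// ListAccounts lists all existing accounts
//
//	@Summary	List accounts
//	@Tags		accounts
//	@Router		/accounts [get]
func ListAccounts() {}
`

// annotations returns the "@..." lines from the doc comment of funcName.
func annotations(source, funcName string) ([]string, error) {
	fset := token.NewFileSet()
	file, err := parser.ParseFile(fset, "controller.go", source, parser.ParseComments)
	if err != nil {
		return nil, err
	}
	var out []string
	for _, decl := range file.Decls {
		fn, ok := decl.(*ast.FuncDecl)
		if !ok || fn.Name.Name != funcName || fn.Doc == nil {
			continue
		}
		for _, c := range fn.Doc.List {
			// Normalize whitespace so tab- and space-indented lines look alike.
			line := strings.Join(strings.Fields(strings.TrimPrefix(c.Text, "//")), " ")
			if strings.HasPrefix(line, "@") {
				out = append(out, line)
			}
		}
	}
	return out, nil
}

func main() {
	lines, err := annotations(src, "ListAccounts")
	if err != nil {
		panic(err)
	}
	for _, l := range lines {
		fmt.Println(l)
	}
}
```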

+

+## Implementation Status

+

+[Swagger 2.0 document](https://swagger.io/docs/specification/2-0/basic-structure/)

+

+- [x] Basic Structure

+- [x] API Host and Base Path

+- [x] Paths and Operations

+- [x] Describing Parameters

+- [x] Describing Request Body

+- [x] Describing Responses

+- [x] MIME Types

+- [x] Authentication

+ - [x] Basic Authentication

+ - [x] API Keys

+- [x] Adding Examples

+- [x] File Upload

+- [x] Enums

+- [x] Grouping Operations With Tags

+- [ ] Swagger Extensions

+

+# Declarative Comments Format

+

+## General API Info

+

+**Example**

+[celler/main.go](https://github.com/swaggo/swag/blob/master/example/celler/main.go)

+

+| annotation | description | example |

+|-------------|--------------------------------------------|---------------------------------|

+| title | **Required.** The title of the application.| // @title Swagger Example API |

+| version | **Required.** Provides the version of the application API.| // @version 1.0 |

+| description | A short description of the application. |// @description This is a sample server celler server. |

+| tag.name | Name of a tag.| // @tag.name This is the name of the tag |

+| tag.description | Description of the tag | // @tag.description Cool Description |

+| tag.docs.url | URL of the external Documentation of the tag | // @tag.docs.url https://example.com|

+| tag.docs.description | Description of the external Documentation of the tag| // @tag.docs.description Best example documentation |

+| termsOfService | The Terms of Service for the API.| // @termsOfService http://swagger.io/terms/ |

+| contact.name | The identifying name of the contact person/organization.| // @contact.name API Support |

+| contact.url | The URL pointing to the contact information. MUST be in the format of a URL. | // @contact.url http://www.swagger.io/support|

+| contact.email| The email address of the contact person/organization. MUST be in the format of an email address.| // @contact.email support@swagger.io |

+| license.name | **Required.** The license name used for the API.|// @license.name Apache 2.0|

+| license.url | A URL to the license used for the API. MUST be in the format of a URL. | // @license.url http://www.apache.org/licenses/LICENSE-2.0.html |

+| host | The host (name or IP) serving the API. | // @host localhost:8080 |

+| BasePath | The base path on which the API is served. | // @BasePath /api/v1 |

+| accept | A list of MIME types the APIs can consume. Note that Accept only affects operations with a request body, such as POST, PUT and PATCH. Value MUST be as described under [Mime Types](#mime-types). | // @accept json |

+| produce | A list of MIME types the APIs can produce. Value MUST be as described under [Mime Types](#mime-types). | // @produce json |

+| query.collection.format | The default collection (array) parameter format in query. Enums: `csv`, `multi`, `pipes`, `tsv`, `ssv`. If not set, `csv` is the default. | // @query.collection.format multi |

+| schemes | The transfer protocols for the operation, separated by spaces. | // @schemes http https |

+| externalDocs.description | Description of the external document. | // @externalDocs.description OpenAPI |

+| externalDocs.url | URL of the external document. | // @externalDocs.url https://swagger.io/resources/open-api/ |

+| x-name | The extension key; must start with `x-` and takes only a JSON value. | // @x-example-key {"key": "value"} |
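In Swagger 2.0 the `schemes`, `host` and `BasePath` annotations work together: a client calls `scheme://host` followed by the base path and the operation's `@Router` path. The sketch below shows that combination with the example values from the table. `operationURLs` is a hypothetical helper, not part of swag.

```go
package main

import (
	"fmt"
	"path"
)

// operationURLs combines @schemes, @host and @BasePath with an operation's
// @Router path: scheme://host + basePath + path.
func operationURLs(schemes []string, host, basePath, opPath string) []string {
	urls := make([]string, 0, len(schemes))
	for _, scheme := range schemes {
		// path.Join removes duplicate slashes between basePath and opPath
		// (it also drops a trailing slash).
		urls = append(urls, fmt.Sprintf("%s://%s%s", scheme, host, path.Join("/", basePath, opPath)))
	}
	return urls
}

func main() {
	// @schemes http https, @host localhost:8080, @BasePath /api/v1,
	// @Router /accounts/{id} [get]
	for _, u := range operationURLs([]string{"http", "https"}, "localhost:8080", "/api/v1", "/accounts/{id}") {
		fmt.Println(u)
	}
}
```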

+

+### Using markdown descriptions

+When a short string in your documentation is insufficient, or you need images, code examples and the like, you may want to use markdown descriptions. To use markdown descriptions, use the following annotations.

+

+

+| annotation | description | example |

+|-------------|--------------------------------------------|---------------------------------|

+| title | **Required.** The title of the application.| // @title Swagger Example API |

+| version | **Required.** Provides the version of the application API.| // @version 1.0 |

+| description.markdown | A short description of the application. Parsed from the api.md file. This is an alternative to @description |// @description.markdown No value needed, this parses the description from api.md |

+| tag.name | Name of a tag.| // @tag.name This is the name of the tag |

+| tag.description.markdown | Description of the tag; an alternative to `tag.description`. The description is read from a file named like `tagname.md`. | // @tag.description.markdown |

+

+

+## API Operation

+

+**Example**

+[celler/controller](https://github.com/swaggo/swag/tree/master/example/celler/controller)

+

+

+| annotation | description |

+|----------------------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| description | A verbose explanation of the operation behavior. |

+| description.markdown | A description of the operation, read from a markdown file in the folder given by `--markdownFiles`. E.g. `@description.markdown details` will load `details.md`. |
+| id | A unique string used to identify the operation. Must be unique among all API operations. |
+| tags | A comma-separated list of tags for the API operation. |
+| summary | A short summary of what the operation does. |
+| accept | A list of MIME types the APIs can consume. Note that Accept only affects operations with a request body, such as POST, PUT and PATCH. Value MUST be as described under [Mime Types](#mime-types). |
+| produce | A list of MIME types the APIs can produce. Value MUST be as described under [Mime Types](#mime-types). |
+| param | Parameters, with space-separated fields: `param name`,`param type`,`data type`,`is mandatory?`,`comment` `attribute(optional)` |
+| security | [Security](#security) requirements for the API operation. |
+| success | Success response, with space-separated fields: `return code or default`,`{param type}`,`data type`,`comment` |
+| failure | Failure response, with space-separated fields: `return code or default`,`{param type}`,`data type`,`comment` |
+| response | Same as `success` and `failure`. |
+| header | Header in response, with space-separated fields: `return code`,`{param type}`,`data type`,`comment` |
+| router | Path definition, with space-separated fields: `path`,`[httpMethod]` |
+| deprecatedrouter | Same as `router`, but deprecated. |
+| x-name | The extension key; must start with `x-` and takes only a JSON value. |
+| x-codeSample | Optional code samples for the `x-codeSamples` extension. Takes `file` as a parameter; swag then searches the folder given by `--codeExampleFiles` for a file named like the summary. |

+| deprecated | Mark endpoint as deprecated. |
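The `param` row packs five space-separated fields, plus optional attributes, into a single line. The hypothetical `parseParam` below is a simplified sketch of how such a line breaks apart. It assumes the comment is always double-quoted and contains no escaped quotes. swag's own parser is more thorough, so treat this as an illustration of the field order only.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// Param holds the space-separated fields of one @Param annotation.
type Param struct {
	Name, In, DataType string
	Required           bool
	Comment            string
	Attributes         string // the optional trailing part, e.g. Format(email)
}

// parseParam splits the text after "@Param" into its fields. The comment
// must be double-quoted so that it can contain spaces.
func parseParam(s string) (Param, error) {
	fields := strings.Fields(s)
	if len(fields) < 5 {
		return Param{}, fmt.Errorf("want at least 5 fields, got %d", len(fields))
	}
	required, err := strconv.ParseBool(fields[3])
	if err != nil {
		return Param{}, fmt.Errorf("is mandatory? must be true or false: %w", err)
	}
	start := strings.Index(s, `"`)
	end := -1
	if start >= 0 {
		end = strings.Index(s[start+1:], `"`)
	}
	if end < 0 {
		return Param{}, fmt.Errorf("comment must be double-quoted")
	}
	end += start + 1 // index of the closing quote in s
	return Param{
		Name:       fields[0],
		In:         fields[1],
		DataType:   fields[2],
		Required:   required,
		Comment:    s[start+1 : end],
		Attributes: strings.TrimSpace(s[end+1:]),
	}, nil
}

func main() {
	p, err := parseParam(`q query string false "name search by q" Format(email)`)
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", p)
}
```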

+

+

+

+## Mime Types

+

+`swag` accepts all MIME Types which are in the correct format, that is, match `*/*`.

+Besides that, `swag` also accepts aliases for some MIME Types as follows:

+

+| Alias | MIME Type |

+|-----------------------|-----------------------------------|

+| json | application/json |

+| xml | text/xml |

+| plain | text/plain |

+| html | text/html |

+| mpfd | multipart/form-data |

+| x-www-form-urlencoded | application/x-www-form-urlencoded |

+| json-api | application/vnd.api+json |

+| json-stream | application/x-json-stream |

+| octet-stream | application/octet-stream |

+| png | image/png |

+| jpeg | image/jpeg |

+| gif | image/gif |
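You can picture alias handling as a map lookup followed by a shape check for everything else. The sketch below is illustrative only. `mimeAliases` and `resolveMIME` are hypothetical names, and the `type/subtype` check is a simplification of "matches `*/*`", not swag's actual validation.

```go
package main

import (
	"fmt"
	"strings"
)

// mimeAliases copies the alias table above.
var mimeAliases = map[string]string{
	"json":                  "application/json",
	"xml":                   "text/xml",
	"plain":                 "text/plain",
	"html":                  "text/html",
	"mpfd":                  "multipart/form-data",
	"x-www-form-urlencoded": "application/x-www-form-urlencoded",
	"json-api":              "application/vnd.api+json",
	"json-stream":           "application/x-json-stream",
	"octet-stream":          "application/octet-stream",
	"png":                   "image/png",
	"jpeg":                  "image/jpeg",
	"gif":                   "image/gif",
}

// resolveMIME expands a known alias; any other value must already have the
// "type/subtype" shape to be accepted.
func resolveMIME(v string) (string, error) {
	if full, ok := mimeAliases[v]; ok {
		return full, nil
	}
	typ, sub, ok := strings.Cut(v, "/")
	if !ok || typ == "" || sub == "" || strings.Contains(sub, "/") {
		return "", fmt.Errorf("%q is neither a known alias nor a type/subtype MIME type", v)
	}
	return v, nil
}

func main() {
	for _, v := range []string{"json", "mpfd", "application/xml", "bogus"} {
		full, err := resolveMIME(v)
		fmt.Println(v, "=>", full, err)
	}
}
```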

+

+

+

+## Param Type

+

+- query

+- path

+- header

+- body

+- formData
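Each param type corresponds to a different part of the HTTP request. The sketch below reads one value of each kind from a standard `net/http` request. The route `/accounts/{id}` and the names `q`, `id`, `X-Request-Id` and `name` are hypothetical examples. It also reflects a Swagger 2.0 rule: an operation may have one `body` parameter or `formData` parameters, but not both.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strings"
)

// readParams shows where each Swagger 2.0 param type lives in a request.
func readParams(r *http.Request) (map[string]string, error) {
	out := map[string]string{
		"query":  r.URL.Query().Get("q"),                       // @Param q query string false "..."
		"path":   strings.TrimPrefix(r.URL.Path, "/accounts/"), // @Param id path int true "..."
		"header": r.Header.Get("X-Request-Id"),                 // @Param X-Request-Id header string false "..."
	}
	// An operation has either a body parameter or formData parameters, never both.
	if strings.HasPrefix(r.Header.Get("Content-Type"), "application/x-www-form-urlencoded") {
		out["formData"] = r.PostFormValue("name") // @Param name formData string true "..."
		return out, nil
	}
	body, err := io.ReadAll(r.Body) // @Param account body Account true "..."
	if err != nil {
		return nil, err
	}
	out["body"] = string(body)
	return out, nil
}

// newRequest builds a sample POST /accounts/7?q=bob request for the demo.
func newRequest(contentType, body string) *http.Request {
	r := httptest.NewRequest(http.MethodPost, "/accounts/7?q=bob", strings.NewReader(body))
	r.Header.Set("Content-Type", contentType)
	r.Header.Set("X-Request-Id", "abc")
	return r
}

func main() {
	for _, r := range []*http.Request{
		newRequest("application/json", `{"name":"alice"}`),
		newRequest("application/x-www-form-urlencoded", "name=alice"),
	} {
		params, err := readParams(r)
		if err != nil {
			panic(err)
		}
		fmt.Println(params)
	}
}
```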

+

+## Data Type

+

+- string (string)

+- integer (int, uint, uint32, uint64)

+- number (float32)

+- boolean (bool)

+- file (param data type when uploading)

+- user defined struct
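The list above amounts to a mapping from Go types to Swagger types. The sketch below expresses it with `reflect`. It covers only the Go types listed here and reports anything else as unmapped, and swag itself works on the parsed source rather than on reflection. `swaggerType`, `typeOf` and the `Account` struct are hypothetical.

```go
package main

import (
	"fmt"
	"reflect"
)

// swaggerType maps the Go types listed above to their Swagger 2.0 type.
func swaggerType(t reflect.Type) string {
	switch t.Kind() {
	case reflect.String:
		return "string"
	case reflect.Int, reflect.Uint, reflect.Uint32, reflect.Uint64:
		return "integer"
	case reflect.Float32:
		return "number"
	case reflect.Bool:
		return "boolean"
	case reflect.Struct:
		return "object" // a user defined struct becomes a schema definition
	default:
		return "unmapped in this sketch"
	}
}

// typeOf is a convenience wrapper around swaggerType for plain values.
func typeOf(v any) string { return swaggerType(reflect.TypeOf(v)) }

// Account is a hypothetical user defined struct.
type Account struct {
	ID     uint64
	Name   string
	Score  float32
	Active bool
}

func main() {
	t := reflect.TypeOf(Account{})
	fmt.Println(t.Name(), typeOf(Account{}))
	for i := 0; i < t.NumField(); i++ {
		f := t.Field(i)
		fmt.Println(" ", f.Name, swaggerType(f.Type))
	}
}
```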

+

+## Security

+| annotation | description | parameters | example |

+|------------|-------------|------------|---------|

+| securitydefinitions.basic | [Basic](https://swagger.io/docs/specification/2-0/authentication/basic-authentication/) auth. | | // @securityDefinitions.basic BasicAuth |

+| securitydefinitions.apikey | [API key](https://swagger.io/docs/specification/2-0/authentication/api-keys/) auth. | in, name, description | // @securityDefinitions.apikey ApiKeyAuth |

+| securitydefinitions.oauth2.application | [OAuth2 application](https://swagger.io/docs/specification/authentication/oauth2/) auth. | tokenUrl, scope, description | // @securitydefinitions.oauth2.application OAuth2Application |

+| securitydefinitions.oauth2.implicit | [OAuth2 implicit](https://swagger.io/docs/specification/authentication/oauth2/) auth. | authorizationUrl, scope, description | // @securitydefinitions.oauth2.implicit OAuth2Implicit |

+| securitydefinitions.oauth2.password | [OAuth2 password](https://swagger.io/docs/specification/authentication/oauth2/) auth. | tokenUrl, scope, description | // @securitydefinitions.oauth2.password OAuth2Password |

+| securitydefinitions.oauth2.accessCode | [OAuth2 access code](https://swagger.io/docs/specification/authentication/oauth2/) auth. | tokenUrl, authorizationUrl, scope, description | // @securitydefinitions.oauth2.accessCode OAuth2AccessCode |

+

+

+| parameters annotation | example |

+|---------------------------------|-------------------------------------------------------------------------|

+| in | // @in header |

+| name | // @name Authorization |

+| tokenUrl | // @tokenUrl https://example.com/oauth/token |

+| authorizationurl | // @authorizationurl https://example.com/oauth/authorize |

+| scope.{scope-name} | // @scope.write Grants write access |

+| description | // @description OAuth protects our entity endpoints |
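swag only describes security schemes in the generated document; your handlers are still responsible for checking credentials. As an illustration, here is a minimal `net/http` middleware sketch for an `ApiKeyAuth` definition. It assumes `@in header`, `@name Authorization` and a single hypothetical static key. Operations would then reference the scheme with `@Security ApiKeyAuth`.

```go
package main

import (
	"crypto/subtle"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// requireAPIKey enforces at runtime what these annotations only document:
//
//	@securityDefinitions.apikey ApiKeyAuth
//	@in header
//	@name Authorization
//
// validKey is a hypothetical placeholder; load real keys from configuration.
func requireAPIKey(validKey string, next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		got := r.Header.Get("Authorization")
		// Constant-time comparison avoids leaking the key through timing.
		if subtle.ConstantTimeCompare([]byte(got), []byte(validKey)) != 1 {
			http.Error(w, "unauthorized", http.StatusUnauthorized)
			return
		}
		next.ServeHTTP(w, r)
	})
}

// statusWithKey sends one request carrying key through the middleware and
// returns the resulting status code.
func statusWithKey(key string) int {
	ok := http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.WriteHeader(http.StatusOK)
	})
	req := httptest.NewRequest(http.MethodGet, "/accounts", nil)
	if key != "" {
		req.Header.Set("Authorization", key)
	}
	rec := httptest.NewRecorder()
	requireAPIKey("secret-key", ok).ServeHTTP(rec, req)
	return rec.Code
}

func main() {
	fmt.Println(statusWithKey("secret-key"), statusWithKey("wrong"), statusWithKey(""))
}
```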

+

+## Attribute

+

+```go

+// @Param enumstring query string false "string enums" Enums(A, B, C)

+// @Param enumint query int false "int enums" Enums(1, 2, 3)

+// @Param enumnumber query number false "number enums" Enums(1.1, 1.2, 1.3)

+// @Param string query string false "string valid" minlength(5) maxlength(10)

+// @Param int query int false "int valid" minimum(1) maximum(10)

+// @Param default query string false "string default" default(A)

+// @Param example query string false "string example" example(string)

+// @Param collection query []string false "string collection" collectionFormat(multi)

+// @Param extensions query []string false "string collection" extensions(x-example=test,x-nullable)

+```
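Each attribute uses the form `name(values)`, with comma-separated values. The sketch below pulls these pairs out of the attribute part of a `@Param` line using a regular expression. `parseAttributes` is hypothetical and lower-cases names for convenience. It is meant to be given only the text after the quoted comment, so that parentheses inside the comment are ignored. It illustrates the syntax and is not swag's parser.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// attrRe matches name(value) pairs such as Enums(A, B, C) or minlength(5).
var attrRe = regexp.MustCompile(`(\w+)\(([^)]*)\)`)

// parseAttributes maps each attribute name (lower-cased in this sketch) to
// its comma-separated, trimmed values.
func parseAttributes(s string) map[string][]string {
	out := map[string][]string{}
	for _, m := range attrRe.FindAllStringSubmatch(s, -1) {
		var values []string
		for _, v := range strings.Split(m[2], ",") {
			values = append(values, strings.TrimSpace(v))
		}
		out[strings.ToLower(m[1])] = values
	}
	return out
}

func main() {
	attrs := parseAttributes(`minlength(5) maxlength(10) Enums(A, B, C)`)
	fmt.Println(attrs["enums"], attrs["minlength"], attrs["maxlength"]) // [A B C] [5] [10]
}
```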

+

+It also works for struct fields:

+

+```go

+type Foo struct {

+ Bar string `minLength:"4" maxLength:"16" example:"random string"`

+ Baz int `minimum:"10" maximum:"20" default:"15"`

+ Qux []string `enums:"foo,bar,baz"`

+}

+```
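These are ordinary Go struct tags, so your own code can read them as well. swag copies the constraints into the generated schema but does not validate requests. The hypothetical `validate` helper below is a minimal reflection-based sketch that enforces `minLength`, `maxLength`, `minimum`, `maximum` and `enums` on a `Foo` value. It handles only the field kinds used in `Foo` and is not part of swag.

```go
package main

import (
	"fmt"
	"reflect"
	"strconv"
	"strings"
	"unicode/utf8"
)

// Foo repeats the struct above.
type Foo struct {
	Bar string   `minLength:"4" maxLength:"16" example:"random string"`
	Baz int      `minimum:"10" maximum:"20" default:"15"`
	Qux []string `enums:"foo,bar,baz"`
}

// tagFloat reads a numeric tag; ok is false if the tag is absent or invalid.
func tagFloat(tag reflect.StructTag, key string) (float64, bool) {
	f, err := strconv.ParseFloat(tag.Get(key), 64)
	return f, err == nil
}

// validate checks a struct value against its tags and returns one message
// per violation.
func validate(v any) []string {
	var problems []string
	rv := reflect.ValueOf(v)
	rt := rv.Type()
	for i := 0; i < rt.NumField(); i++ {
		field, value := rt.Field(i), rv.Field(i)
		switch value.Kind() {
		case reflect.String:
			n := float64(utf8.RuneCountInString(value.String()))
			if lim, ok := tagFloat(field.Tag, "minLength"); ok && n < lim {
				problems = append(problems, fmt.Sprintf("%s: shorter than %v", field.Name, lim))
			}
			if lim, ok := tagFloat(field.Tag, "maxLength"); ok && n > lim {
				problems = append(problems, fmt.Sprintf("%s: longer than %v", field.Name, lim))
			}
		case reflect.Int:
			x := float64(value.Int())
			if lim, ok := tagFloat(field.Tag, "minimum"); ok && x < lim {
				problems = append(problems, fmt.Sprintf("%s: below %v", field.Name, lim))
			}
			if lim, ok := tagFloat(field.Tag, "maximum"); ok && x > lim {
				problems = append(problems, fmt.Sprintf("%s: above %v", field.Name, lim))
			}
		case reflect.Slice: // assumes a []string field
			enums := field.Tag.Get("enums")
			if enums == "" {
				continue
			}
			allowed := map[string]bool{}
			for _, e := range strings.Split(enums, ",") {
				allowed[e] = true
			}
			for j := 0; j < value.Len(); j++ {
				if s := value.Index(j).String(); !allowed[s] {
					problems = append(problems, fmt.Sprintf("%s: %q not in enums", field.Name, s))
				}
			}
		}
	}
	return problems
}

func main() {
	fmt.Println(validate(Foo{Bar: "abcd", Baz: 15, Qux: []string{"foo"}}))
	fmt.Println(validate(Foo{Bar: "abc", Baz: 25, Qux: []string{"nope"}}))
}
```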

+

+### Available

+

+Field Name | Type | Description

+---|:---:|---

+validate | `string` | Determines the validation for the parameter. Possible values are: `required,optional`.

+default | * | Declares the value of the parameter that the server will use if none is provided, for example a "count" to control the number of results per page might default to 100 if not supplied by the client in the request. (Note: "default" has no meaning for required parameters.) See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-6.2. Unlike JSON Schema this value MUST conform to the defined [`type`](#parameterType) for this parameter.

+maximum | `number` | See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-5.1.2.

+minimum | `number` | See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-5.1.3.

+multipleOf | `number` | See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-5.1.1.

+maxLength | `integer` | See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-5.2.1.

+minLength | `integer` | See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-5.2.2.

+enums | [\*] | See https://tools.ietf.org/html/draft-fge-json-schema-validation-00#section-5.5.1.

+format | `string` | The extending format for the previously mentioned [`type`](#parameterType). See [Data Type Formats](https://swagger.io/specification/v2/#dataTypeFormat) for further details.

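+collectionFormat | `string` | Determines the format of the array if type array is used. Possible values are: `csv` (comma-separated, `foo,bar`), `ssv` (space-separated, `foo bar`), `tsv` (tab-separated), `pipes` (pipe-separated, `foo\|bar`), and `multi` (multiple parameter instances instead of multiple values for a single instance, `foo=bar&foo=baz`; valid only for parameters in `query` or `formData`). Default value is `csv`.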
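On the wire, the formats differ only in how the array values are written into the query string. The sketch below uses `net/url` to encode a `tags` array in each format. `encodeCollection` is a hypothetical helper and shows what a client would send, not what swag generates. Note that `url.Values.Encode` percent-escapes the separators: `,` becomes `%2C`, `|` becomes `%7C` and a space becomes `+`.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// separators for the collection formats that join values into one string.
var separators = map[string]string{"csv": ",", "ssv": " ", "tsv": "\t", "pipes": "|"}

// encodeCollection renders an array query parameter according to its
// collectionFormat and returns the URL-encoded query string.
func encodeCollection(name, format string, values []string) (string, error) {
	if format == "" {
		format = "csv" // default collection format
	}
	q := url.Values{}
	if format == "multi" {
		// one name=value pair per element
		for _, v := range values {
			q.Add(name, v)
		}
		return q.Encode(), nil
	}
	sep, ok := separators[format]
	if !ok {
		return "", fmt.Errorf("unknown collectionFormat %q", format)
	}
	q.Set(name, strings.Join(values, sep))
	return q.Encode(), nil
}

func main() {
	for _, f := range []string{"csv", "ssv", "tsv", "pipes", "multi"} {
		s, err := encodeCollection("tags", f, []string{"a", "b"})
		if err != nil {
			panic(err)
		}
		fmt.Printf("%-5s %s\n", f, s)
	}
}
```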


+

+Swag converts Go annotations to Swagger Documentation 2.0. We've created a variety of plugins for popular [Go web frameworks](#supported-web-frameworks). This allows you to quickly integrate with an existing Go project (using Swagger UI).

+

+## Contents
+- [Getting started](#getting-started)
+ - [Supported Web Frameworks](#supported-web-frameworks)
+ - [How to use it with Gin](#how-to-use-it-with-gin)
+ - [The swag formatter](#the-swag-formatter)
+ - [Implementation Status](#implementation-status)
+ - [Declarative Comments Format](#declarative-comments-format)
+ - [General API Info](#general-api-info)
+ - [API Operation](#api-operacao)
+ - [Security](#seguranca)
+ - [Examples](#exemplos)
+ - [Descriptions over multiple lines](#descricoes-sobre-múltiplas-linhas)
+ - [User defined structure with an array type](#-estrutura-definida-pelo-utilizador-com-um-um-tipo)
+ - [Function scoped struct declaration](#function-scoped-struct-declaration)
+ - [Model composition in response](#model-composição-em-resposta)
+ - [Add a header in response](#add-a-headers-in-response)
+ - [Use multiple path params](#use-multiple-path-params)
+ - [Example value of struct](#exemplo-do-valor-de-estrutura)
+ - [SchemaExample of body](#schemaexample-of-body)
+ - [Description of struct](#descrição-da-estrutura)
+ - [Use swaggertype tag to support a custom type](#use-swaggertype-tag-to-supported-custom-type)
+ - [Use global overrides to support a custom type](#use-global-overrides-to-support-a-custom-type)
+ - [Use swaggerignore tag to exclude a field](#use-swaggerignore-tag-to-excluir-um-campo)
+ - [Add extension info to struct field](#add-extension-info-to-struct-field)
+ - [Rename model to display](#renome-modelo-a-exibir)
+ - [How to use security annotations](#como-utilizar-as-anotações-de-segurança)
+ - [Add a description for enum items](#add-a-description-for-enum-items)
+ - [Generate only specific docs file types](#generate-only-specific-docs-file-file-types)
+ - [How to use generics](#como-usar-tipos-genéricos)
+- [About the Project](#sobre-o-projecto)
+
+## Getting started

+

+1. Add comments to your API source code. See [Declarative Comments Format](#declarative-comments-format).

+

+2. Download swag by using:

+```sh

+go install github.com/swaggo/swag/cmd/swag@latest

+```

+To build from source you need [Go](https://golang.org/dl/) (1.18 or newer).
+
+Or download a pre-compiled binary from the [release page](https://github.com/swaggo/swag/releases).
+
+3. Run `swag init` in the project's root folder, which contains the `main.go` file. This will parse your comments and generate the required files (the `docs` folder and `docs/docs.go`).

+```sh

+swag init

+```

+

+Make sure to import the generated `docs/docs.go` so that your specific configuration gets `init`'ed. If your General API annotations do not live in `main.go`, you can let swag know with the `-g` flag.

+```sh

+swag init -g http/api.go

+```

+

+4. (optional) Use `swag fmt` to format the SWAG comments. (Please upgrade to the latest version first.)

+

+```sh

+swag fmt

+```

+

+## swag cli

+

+```sh

+swag init -h

+NAME:
+ swag init - Create docs.go
+
+USAGE:
+ swag init [command options] [arguments...]
+
+OPTIONS:
+ --quiet, -q Make the logger quiet. (default: false)
+ --generalInfo value, -g value Go file path in which 'swagger general API Info' is written (default: "main.go")
+ --dir value, -d value Directories you want to parse, comma separated and general-info file must be in the first one (default: "./")
+ --exclude value Exclude directories and files when searching, comma separated
+ --propertyStrategy value, -p value Property Naming Strategy like snakecase,camelcase,pascalcase (default: "camelcase")
+ --output value, -o value Output directory for all the generated files (swagger.json, swagger.yaml and docs.go) (default: "./docs")
+ --outputTypes value, --ot value Output types of generated files (docs.go, swagger.json, swagger.yaml) like go,json,yaml (default: "go,json,yaml")
+ --parseVendor Parse go files in 'vendor' folder, disabled by default (default: false)
+ --parseInternal Parse go files in internal packages, disabled by default (default: false)
+ --generatedTime Generate timestamp at the top of docs.go, disabled by default (default: false)
+ --parseDepth value Dependency parse depth (default: 100)
+ --templateDelims value, --td value Provide custom delimiters for Go template generation. The format is leftDelim,rightDelim. For example: "[[,]]"
+ ...
+
+ --help, -h show help (default: false)

+```

+

+```bash

+swag fmt -h

+NAME:
+ swag fmt - format swag comments
+
+USAGE:
+ swag fmt [command options] [arguments...]
+
+OPTIONS:
+ --dir value, -d value Directories you want to parse, comma separated and general-info file must be in the first one (default: "./")
+ --exclude value Exclude directories and files when searching, comma separated
+ --generalInfo value, -g value Go file path in which 'swagger general API Info' is written (default: "main.go")
+ --help, -h show help (default: false)

+

+```

+

+## Supported Web Frameworks

+

+- [gin](http://github.com/swaggo/gin-swagger)

+- [echo](http://github.com/swaggo/echo-swagger)

+- [buffalo](https://github.com/swaggo/buffalo-swagger)

+- [net/http](https://github.com/swaggo/http-swagger)

+- [gorilla/mux](https://github.com/swaggo/http-swagger)

+- [go-chi/chi](https://github.com/swaggo/http-swagger)

+- [flamingo](https://github.com/i-love-flamingo/swagger)

+- [fiber](https://github.com/gofiber/swagger)

+- [atreugo](https://github.com/Nerzal/atreugo-swagger)

+- [hertz](https://github.com/hertz-contrib/swagger)

+

+## How to use it with Gin
+
+Find the example source code [here](https://github.com/swaggo/swag/tree/master/example/celler).
+
+1. After using `swag init` to generate Swagger 2.0 docs, import the following packages:

+```go

+import ginSwagger "github.com/swaggo/gin-swagger" // gin-swagger middleware
+import swaggerFiles "github.com/swaggo/files" // swagger embed files

+```

+

+2. Add [General API](#general-api-info) annotations in `main.go` code:

+

+

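+```go
+package main
+
+import (
+	"github.com/gin-gonic/gin"
+	swaggerFiles "github.com/swaggo/files"
+	ginSwagger "github.com/swaggo/gin-swagger"
+
+	"github.com/swaggo/swag/example/celler/controller"
+	_ "github.com/swaggo/swag/example/celler/docs" // docs generated by swag init
+)
+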

+// @title Swagger Example API

+// @version 1.0

+// @description This is a sample server celler server.

+// @termsOfService http://swagger.io/terms/

+

+// @contact.name API Support

+// @contact.url http://www.swagger.io/support

+// @contact.email support@swagger.io

+

+// @license.name Apache 2.0

+// @license.url http://www.apache.org/licenses/LICENSE-2.0.html

+

+// @host localhost:8080

+// @BasePath /api/v1

+

+// @securityDefinitions.basic BasicAuth

+

+// @externalDocs.description OpenAPI

+// @externalDocs.url https://swagger.io/resources/open-api/

+func main() {

+ r := gin.Default()

+

+ c := controller.NewController()

+

+ v1 := r.Group("/api/v1")

+ {

+ accounts := v1.Group("/accounts")

+ {

+ accounts.GET(":id", c.ShowAccount)

+ accounts.GET("", c.ListAccounts)

+ accounts.POST("", c.AddAccount)

+ accounts.DELETE(":id", c.DeleteAccount)

+ accounts.PATCH(":id", c.UpdateAccount)

+ accounts.POST(":id/images", c.UploadAccountImage)

+ }

+ //...

+ }

+ r.GET("/swagger/*any", ginSwagger.WrapHandler(swaggerFiles.Handler))

+ r.Run(":8080")

+}

+//...

+```

+

+Additionally, some general API info can be set dynamically. The generated `docs` package exports a `SwaggerInfo` variable, which you can use to set the title, description, version, host, base path and schemes programmatically. Example using Gin:

+

+```go

+package main

+

+import (

+ "github.com/gin-gonic/gin"

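+	swaggerFiles "github.com/swaggo/files"
+	ginSwagger "github.com/swaggo/gin-swagger"
+
+	// docs is generated by Swag CLI, you have to import it. Relative paths such as
+	// "./docs" are not allowed in module mode, so use your module path instead;
+	// example.com/myapp is a hypothetical placeholder.
+	"example.com/myapp/docs"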

+)

+

+// @contact.name API Support

+// @contact.url http://www.swagger.io/support

+// @contact.email support@swagger.io

+

+// @license.name Apache 2.0

+// @license.url http://www.apache.org/licenses/LICENSE-2.0.html

+func main() {

+

+ // programmatically set swagger info

+ docs.SwaggerInfo.Title = "Swagger Example API"

+ docs.SwaggerInfo.Description = "This is a sample server Petstore server."

+ docs.SwaggerInfo.Version = "1.0"

+ docs.SwaggerInfo.Host = "petstore.swagger.io"

+ docs.SwaggerInfo.BasePath = "/v2"

+ docs.SwaggerInfo.Schemes = []string{"http", "https"}

+

+ r := gin.New()

+

+ // use ginSwagger middleware to serve the API docs

+ r.GET("/swagger/*any", ginSwagger.WrapHandler(swaggerFiles.Handler))

+

+ r.Run()

+}

+```

+

+3. Add [API Operation](#api-operacao) annotations in `controller` code:

+

+```go

+package controller

+

+import (

+ "fmt"

+ "net/http"

+ "strconv"

+

+ "github.com/gin-gonic/gin"

+ "github.com/swaggo/swag/example/celler/httputil"

+ "github.com/swaggo/swag/example/celler/model"

+)

+

+// ShowAccount godoc

+// @Summary Show an account

+// @Description get string by ID

+// @Tags accounts

+// @Accept json

+// @Produce json

+// @Param id path int true "Account ID"

+// @Success 200 {object} model.Account

+// @Failure 400 {object} httputil.HTTPError

+// @Failure 404 {object} httputil.HTTPError

+// @Failure 500 {object} httputil.HTTPError

+// @Router /accounts/{id} [get]

+func (c *Controller) ShowAccount(ctx *gin.Context) {

+ id := ctx.Param("id")

+ aid, err := strconv.Atoi(id)

+ if err != nil {

+ httputil.NewError(ctx, http.StatusBadRequest, err)

+ return

+ }

+ account, err := model.AccountOne(aid)

+ if err != nil {

+ httputil.NewError(ctx, http.StatusNotFound, err)

+ return

+ }

+ ctx.JSON(http.StatusOK, account)

+}

+

+// ListAccounts godoc

+// @Summary List accounts

+// @Description get accounts

+// @Tags accounts

+// @Accept json

+// @Produce json

+// @Param q query string false "name search by q" Format(email)

+// @Success 200 {array} model.Account

+// @Failure 400 {object} httputil.HTTPError

+// @Failure 404 {object} httputil.HTTPError

+// @Failure 500 {object} httputil.HTTPError

+// @Router /accounts [get]

+func (c *Controller) ListAccounts(ctx *gin.Context) {

+ q := ctx.Request.URL.Query().Get("q")

+ accounts, err := model.AccountsAll(q)

+ if err != nil {

+ httputil.NewError(ctx, http.StatusNotFound, err)

+ return

+ }

+ ctx.JSON(http.StatusOK, accounts)

+}

+//...

+```

+

+```console

+swag init

+```

+

+4. Run your app, and browse to http://localhost:8080/swagger/index.html. You will see the Swagger 2.0 API documents.

+

+

+

+## The swag formatter
+
+Swag comments can be formatted automatically, just like `go fmt`.
+Find the result of formatting [here](https://github.com/swaggo/swag/tree/master/example/celler).

+

+Usage:

+```shell

+swag fmt

+```

+

+Exclude folder:

+```shell

+swag fmt -d ./ --exclude ./internal

+```

+

+When using `swag fmt`, make sure the function has a standard doc comment; otherwise the formatting may come out wrong.
+This is because `swag fmt` indents swag comments with tabs, which is only allowed *after* a standard doc comment.
+
+For example, use:

+

+```go

+// ListAccounts lists all existing accounts

+//

+// @Summary List accounts

+// @Description get accounts

+// @Tags accounts

+// @Accept json

+// @Produce json

+// @Param q query string false "name search by q" Format(email)

+// @Success 200 {array} model.Account

+// @Failure 400 {object} httputil.HTTPError

+// @Failure 404 {object} httputil.HTTPError

+// @Failure 500 {object} httputil.HTTPError

+// @Router /accounts [get]

+func (c *Controller) ListAccounts(ctx *gin.Context) {

+```

+

+## Implementation Status
+
+[Swagger 2.0 document](https://swagger.io/docs/specification/2-0/basic-structure/)
+
+- [x] Basic Structure
+- [x] API Host and Base Path
+- [x] Paths and Operations
+- [x] Describing Parameters
+- [x] Describing Request Body
+- [x] Describing Responses
+- [x] MIME Types
+- [x] Authentication
+ - [x] Basic Authentication
+ - [x] API Keys
+- [x] Adding Examples
+- [x] File Upload
+- [x] Enums
+- [x] Grouping Operations With Tags
+- [ ] Swagger Extensions

+

+## Declarative Comments Format

+

+## General API Info

+

+**Example**

+[celler/main.go](https://github.com/swaggo/swag/blob/master/example/celler/main.go)

+

+| annotation | description | example |

+|-------------|--------------------------------------------|---------------------------------|

+| title | **Required.** The title of the application.| // @title Swagger Example API |

+| version | **Required.** Provides the version of the application API.| // @version 1.0 |

+| description | A short description of the application. | // @description This is a sample celler server. |

+| tag.name | Name of a tag.| // @tag.name This is the name of the tag |

+| tag.description | Description of the tag | // @tag.description Cool Description |

+| tag.docs.url | Url of the external Documentation of the tag | // @tag.docs.url https://example.com|

+| tag.docs.description | Description of the external Documentation of the tag| // @tag.docs.description Best example documentation |

+| termsOfService | The Terms of Service for the API.| // @termsOfService http://swagger.io/terms/ |

+| contact.name | The contact information for the exposed API.| // @contact.name API Support |

+| contact.url | The URL pointing to the contact information. MUST be in the format of a URL. | // @contact.url http://www.swagger.io/support|

+| contact.email| The email address of the contact person/organization. MUST be in the format of an email address.| // @contact.email support@swagger.io |

+| license.name | **Required.** The license name used for the API.|// @license.name Apache 2.0|

+| license.url | A URL to the license used for the API. MUST be in the format of a URL. | // @license.url http://www.apache.org/licenses/LICENSE-2.0.html |

+| host | The host (name or IP) serving the API. | // @host localhost:8080 |

+| BasePath | The base path on which the API is served. | // @BasePath /api/v1 |

+| accept | A list of MIME types the APIs can consume. Note that accept only affects operations with a request body, such as POST, PUT and PATCH. Value MUST be as described under [Mime Types](#mime-types). | // @accept json |

+| produce | A list of MIME types the APIs can produce. Value MUST be as described under [Mime Types](#mime-types). | // @produce json |

+| query.collection.format | The default collection (array) param format in query. Enums: csv, multi, pipes, tsv, ssv. If not set, csv is the default.| // @query.collection.format multi |

+| schemes | The transfer protocols for the operation, separated by spaces. | // @schemes http https |

+| externalDocs.description | Description of the external document. | // @externalDocs.description OpenAPI |

+| externalDocs.url | URL of the external document. | // @externalDocs.url https://swagger.io/resources/open-api/ |

+| x-name | The extension key, must start with x- and takes only a JSON value | // @x-example-key {"key": "value"} |

+
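As a minimal sketch of where these annotations live, the `main.go` below puts the general API info on `func main()` of a plain `net/http` server, which is the file `swag init` reads by default (the `-g` flag selects a different one). The `/ping` route, `apiBasePath` and `newMux` are hypothetical names chosen for illustration; the annotation values are the ones from the table above.

```go
package main

import (
	"encoding/json"
	"log"
	"net/http"
)

// apiBasePath is a hypothetical constant kept in sync with @BasePath below.
const apiBasePath = "/api/v1"

// newMux registers the (hypothetical) API routes under apiBasePath.
func newMux() *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc(apiBasePath+"/ping", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		_ = json.NewEncoder(w).Encode(map[string]string{"message": "pong"})
	})
	return mux
}

// @title           Swagger Example API
// @version         1.0
// @description     This is a sample celler server.
// @termsOfService  http://swagger.io/terms/
//
// @contact.name   API Support
// @contact.url    http://www.swagger.io/support
// @contact.email  support@swagger.io
//
// @license.name  Apache 2.0
// @license.url   http://www.apache.org/licenses/LICENSE-2.0.html
//
// @host      localhost:8080
// @BasePath  /api/v1
// @schemes   http https
// @accept    json
// @produce   json
func main() {
	// Serve the API on the host and base path declared above.
	log.Fatal(http.ListenAndServe(":8080", newMux()))
}
```

Keeping `@BasePath` and the prefix the routes are actually mounted under in one place (here `apiBasePath`) avoids the generated docs pointing at paths the server does not serve.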

+### Using markdown descriptions

+When a short string in your documentation is insufficient, or you need images, code examples and the like, you may want to use markdown descriptions. To use markdown descriptions, use the following annotations.

+

+| annotation | description | example |

+|-------------|--------------------------------------------|---------------------------------|

+| title | **Required.** The title of the application.| // @title Swagger Example API |

+| version | **Required.** Provides the version of the application API.| // @version 1.0 |

+| description.markdown | A short description of the application, parsed from the api.md file. This is an alternative to @description. |// @description.markdown No value needed, this parses the description from api.md |

+| tag.name | Name of a tag.| // @tag.name This is the name of the tag |

+| tag.description.markdown | Description of the tag; an alternative to tag.description. The description is read from a file named tagname.md | // @tag.description.markdown |

+

+## API Operation

+

+**Example**

+[celler/controller](https://github.com/swaggo/swag/tree/master/example/celler/controller)

+

+| annotation | description |

+|-------------|----------------------------------------------------------------------------------------------------------------------------|

+| description | A verbose explanation of the operation behavior. |

+| description.markdown | A description of the operation, read from a markdown file. For example, `@description.markdown details` will load `details.md`. |

+| id | A unique string used to identify the operation. Must be unique among all API operations. |

+| tags | A list of tags for each API operation, separated by commas. |

+| summary | A short summary of what the operation does. |

+| accept | A list of MIME types the APIs can consume. Note that accept only affects operations with a request body, such as POST, PUT and PATCH. Value MUST be as described under [Mime Types](#mime-types). |

+| produce | A list of MIME types the APIs can produce. Value MUST be as described under [Mime Types](#mime-types). |

+| param | Parameters separated by spaces. `param name`,`param type`,`data type`,`is mandatory?`,`comment` `attribute(optional)` |

+| security | [Security](#security) for each API operation. |

+| success | Success response, separated by spaces. `return code or default`,`{param type}`,`data type`,`comment` |

+| failure | Failure response, separated by spaces. `return code or default`,`{param type}`,`data type`,`comment` |

+| response | Same as `success` and `failure` |

+| header | Header in response, separated by spaces. `return code`,`{param type}`,`data type`,`comment` |

+| router | Path definition, separated by spaces. `path`,`[httpMethod]` |

+| x-name | The extension key, must start with x- and takes only a JSON value. |

+| x-codeSample | Optional Markdown usage. Takes `file` as a parameter and then searches the given folder for a file named after the summary. |

+| deprecated | Mark the endpoint as deprecated. |

+
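A minimal sketch of an operation annotated on a plain `net/http` handler, combining `@ID`, `@Tags`, `@Param`, `@Success`, `@Header`, `@Failure` and `@Router`. `Account`, `HTTPError`, `parseAccountID` and the `/accounts/{id}` route are hypothetical names for illustration, not part of swag; the `id` path parameter declared with `@Param` should match the `{id}` placeholder in `@Router`.

```go
package main

import (
	"encoding/json"
	"errors"
	"log"
	"net/http"
	"strconv"
	"strings"
)

// Account is a hypothetical model used only for this illustration.
type Account struct {
	ID   int    `json:"id" example:"1"`
	Name string `json:"name" example:"account name"`
}

// HTTPError is a hypothetical error payload.
type HTTPError struct {
	Code    int    `json:"code" example:"400"`
	Message string `json:"message" example:"status bad request"`
}

// parseAccountID extracts the {id} segment from a path such as /accounts/42.
func parseAccountID(path string) (int, error) {
	raw := strings.TrimPrefix(path, "/accounts/")
	if raw == path || raw == "" || strings.Contains(raw, "/") {
		return 0, errors.New("path must look like /accounts/{id}")
	}
	return strconv.Atoi(raw)
}

// ShowAccount returns a single account.
//
// @Summary      Show an account
// @Description  get account by ID
// @ID           get-account-by-id
// @Tags         accounts
// @Accept       json
// @Produce      json
// @Param        id   path      int  true  "Account ID"
// @Success      200  {object}  Account
// @Header       200  {string}  X-Request-Id  "request identifier"
// @Failure      400  {object}  HTTPError
// @Router       /accounts/{id} [get]
func ShowAccount(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Content-Type", "application/json")
	id, err := parseAccountID(r.URL.Path)
	if err != nil {
		// Matches the documented @Failure 400 response.
		w.WriteHeader(http.StatusBadRequest)
		_ = json.NewEncoder(w).Encode(HTTPError{Code: http.StatusBadRequest, Message: err.Error()})
		return
	}
	// Matches the documented @Header for the 200 response.
	w.Header().Set("X-Request-Id", "example")
	_ = json.NewEncoder(w).Encode(Account{ID: id, Name: "account name"})
}

func main() {
	http.HandleFunc("/accounts/", ShowAccount)
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

swag only reads the comments; it is up to the handler to actually return the status codes, headers and models the annotations promise.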

+## Mime Types

+

+`swag` accepts all MIME types that are in the correct format, that is, that match `*/*`.

+In addition, `swag` also accepts aliases for some MIME types, as follows:

+

+

+| Alias | MIME Type |

+|-----------------------|-----------------------------------|

+| json | application/json |

+| xml | text/xml |

+| plain | text/plain |

+| html | text/html |

+| mpfd | multipart/form-data |

+| x-www-form-urlencoded | application/x-www-form-urlencoded |

+| json-api | application/vnd.api+json |

+| json-stream | application/x-json-stream |

+| octet-stream | application/octet-stream |

+| png | image/png |

+| jpeg | image/jpeg |

+| gif | image/gif |

+
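To make the alias rule concrete, here is a small, hypothetical resolver that mirrors the table: known aliases expand to their full MIME type, and any other value must look like `type/subtype`. It only illustrates the documented behavior; it is not swag's own code.

```go
package main

import (
	"fmt"
	"strings"
)

// mimeAliases mirrors the alias table above (alias -> full MIME type).
var mimeAliases = map[string]string{
	"json":                  "application/json",
	"xml":                   "text/xml",
	"plain":                 "text/plain",
	"html":                  "text/html",
	"mpfd":                  "multipart/form-data",
	"x-www-form-urlencoded": "application/x-www-form-urlencoded",
	"json-api":              "application/vnd.api+json",
	"json-stream":           "application/x-json-stream",
	"octet-stream":          "application/octet-stream",
	"png":                   "image/png",
	"jpeg":                  "image/jpeg",
	"gif":                   "image/gif",
}

// resolveMIME is a hypothetical helper: it expands a known alias, accepts any
// other value shaped like type/subtype, and rejects everything else.
func resolveMIME(value string) (string, error) {
	if mime, ok := mimeAliases[value]; ok {
		return mime, nil
	}
	parts := strings.Split(value, "/")
	if len(parts) == 2 && parts[0] != "" && parts[1] != "" {
		return value, nil
	}
	return "", fmt.Errorf("%q is neither a known alias nor a type/subtype MIME type", value)
}

func main() {
	for _, v := range []string{"json", "mpfd", "application/vnd.ms-excel", "bogus"} {
		mime, err := resolveMIME(v)
		fmt.Printf("%-26s -> %q (err: %v)\n", v, mime, err)
	}
}
```

Under this rule, `// @Accept mpfd` and `// @Accept multipart/form-data` describe the same consumed type.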

+

+

+## Param Type

+

+- query

+- path

+- header

+- body

+- formData

+
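A sketch showing the query, header, body and formData parameter types in context, assuming a plain `net/http` server; `SearchAccounts`, `UploadAccountImage`, `AccountFilter` and `parseLimit` are hypothetical. Swagger 2.0 does not allow `body` and `formData` parameters in the same operation, so they appear in separate handlers. The `default(20) maximum(100)` attributes are mirrored by `parseLimit` because swag only documents them; it does not enforce them at runtime.

```go
package main

import (
	"encoding/json"
	"log"
	"net/http"
	"strconv"
)

// AccountFilter is a hypothetical user-defined struct sent as the body.
type AccountFilter struct {
	Name string `json:"name" example:"alice"`
}

// parseLimit applies the default(20) and maximum(100) declared for the
// "limit" query parameter, since the annotations themselves are not enforced.
func parseLimit(raw string) int {
	n, err := strconv.Atoi(raw)
	if err != nil || n < 1 {
		return 20
	}
	if n > 100 {
		return 100
	}
	return n
}

// SearchAccounts shows query, header and body parameters.
//
// @Summary  Search accounts
// @Tags     accounts
// @Accept   json
// @Produce  json
// @Param    limit         query   int            false  "page size"  default(20) maximum(100)
// @Param    X-Request-Id  header  string         false  "request identifier"
// @Param    filter        body    AccountFilter  true   "search filter"
// @Success  200  {array}  string
// @Router   /accounts/search [post]
func SearchAccounts(w http.ResponseWriter, r *http.Request) {
	limit := parseLimit(r.URL.Query().Get("limit"))
	var filter AccountFilter
	if err := json.NewDecoder(r.Body).Decode(&filter); err != nil {
		http.Error(w, err.Error(), http.StatusBadRequest)
		return
	}
	if id := r.Header.Get("X-Request-Id"); id != "" {
		w.Header().Set("X-Request-Id", id) // echo the header back
	}
	w.Header().Set("Content-Type", "application/json")
	// A real handler would query storage; this sketch just echoes its inputs.
	_ = json.NewEncoder(w).Encode([]string{filter.Name + " (limit " + strconv.Itoa(limit) + ")"})
}

// UploadAccountImage shows formData parameters, including a file.
//
// @Summary  Upload an account image
// @Tags     accounts
// @Accept   mpfd
// @Produce  json
// @Param    file         formData  file    true   "image to upload"
// @Param    description  formData  string  false  "image description"
// @Success  200  {string}  string  "uploaded file name"
// @Router   /accounts/images [post]
func UploadAccountImage(w http.ResponseWriter, r *http.Request) {
	file, header, err := r.FormFile("file") // also parses the multipart form
	if err != nil {
		http.Error(w, err.Error(), http.StatusBadRequest)
		return
	}
	defer file.Close()
	log.Printf("received %s (%q)", header.Filename, r.FormValue("description"))
	w.Header().Set("Content-Type", "application/json")
	_ = json.NewEncoder(w).Encode(header.Filename)
}

func main() {
	http.HandleFunc("/accounts/search", SearchAccounts)
	http.HandleFunc("/accounts/images", UploadAccountImage)
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

Path parameters are declared the same way with `path` as the param type, and their names should appear as `{name}` placeholders in `@Router`.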

+## Data Type

+

+- string (string)

+- integer (int, uint, uint32, uint64)

+- number (float32)

+- boolean (bool)

+- file (param data type when uploading)

+- user defined struct

+
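The mapping in the list above can be illustrated with `reflect`. `swaggerTypeOf`, `swaggerType` and the `Account` struct are hypothetical; the sketch covers only the Go types the list names, whereas swag itself also recognises others, such as `int64` and `float64`.

```go
package main

import (
	"fmt"
	"reflect"
)

// swaggerTypeOf maps a Go type to the Swagger 2.0 data type named in the list.
// Illustration only: swag's real type resolution handles many more cases.
func swaggerTypeOf(t reflect.Type) string {
	if t == nil {
		return "unsupported in this sketch"
	}
	switch t.Kind() {
	case reflect.String:
		return "string"
	case reflect.Int, reflect.Uint, reflect.Uint32, reflect.Uint64:
		return "integer"
	case reflect.Float32:
		return "number"
	case reflect.Bool:
		return "boolean"
	case reflect.Struct:
		// User-defined structs become schema definitions of type object.
		return "object"
	default:
		return "unsupported in this sketch"
	}
}

// swaggerType inspects the dynamic type of a value.
func swaggerType(v any) string { return swaggerTypeOf(reflect.TypeOf(v)) }

// Account is a hypothetical user-defined struct.
type Account struct {
	ID      uint64
	Name    string
	Balance float32
	Active  bool
}

func main() {
	// Print the Swagger type each field of Account would map to.
	t := reflect.TypeOf(Account{})
	for i := 0; i < t.NumField(); i++ {
		f := t.Field(i)
		fmt.Printf("%-8s %-8s -> %s\n", f.Name, f.Type, swaggerTypeOf(f.Type))
	}
}
```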

+## Security

+| annotation | description | parameters | example |

+|------------|-------------|------------|---------|

+| securitydefinitions.basic | [Basic](https://swagger.io/docs/specification/2-0/authentication/basic-authentication/) auth. | | // @securityDefinitions.basic BasicAuth |

+| securitydefinitions.apikey | [API key](https://swagger.io/docs/specification/2-0/authentication/api-keys/) auth. | in, name, description | // @securityDefinitions.apikey ApiKeyAuth |